Automating Library Upgrades: Reducing Manual Effort

At Halodoc, we continuously work to keep our Android platform secure, stable, and aligned with the rapidly evolving ecosystem. Staying up to date with third-party libraries is essential to ensure performance, reliability, and long-term maintainability.

As our codebase and dependency graph expanded, upgrading libraries became increasingly manual and error-prone. Each library upgrade demanded manual review of release notes and compatibility across modules, resulting in significant engineering effort.

Introduction

Traditionally, library upgrades in Android projects are performed manually by developers, who check for new versions, analyze release notes, and validate changes across modules. This approach is time-consuming, error-prone, and adds significant maintenance overhead.

We aimed to create an automated, reliable upgrade workflow that identifies new stable versions, highlights relevant changes and risks drawn from release notes, and surfaces actionable guidance for engineering review. Instead of treating library updates as one-off maintenance tasks, we approached them as proactive, trackable, team-owned processes, making upgrades predictable, safe, and less dependent on manual intervention.

Quick Overview

Problem: Manual, spreadsheet-driven library upgrades across many Android modules.

Solution: Python + BuildSrc discovery, Gemini-based release-note analysis, CI/CD-driven MR creation.

Outcome: Upgrades become automated, auditable, reversible, and consistent across modules.

Scope: Android multi-module project using BuildSrc as the source of truth.

The Problem: Manual Library Upgrades and Module Management

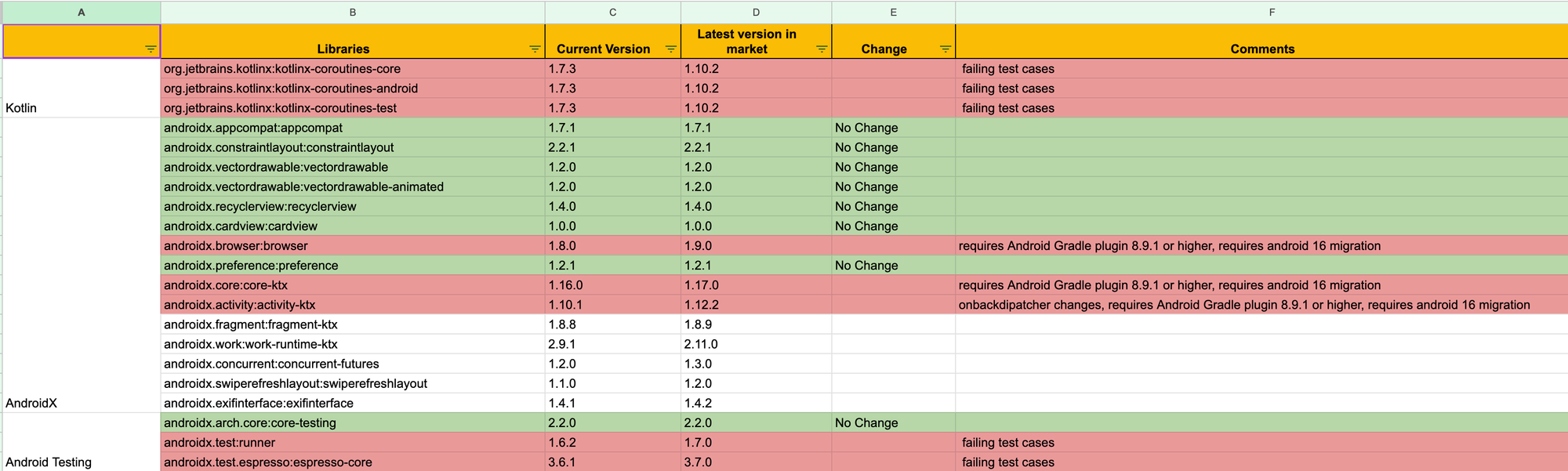

Before automation, library upgrades were a heavily manual process. Engineers had to track versions, release notes, and relevant changes across modules—essentially maintaining a single source-of-truth spreadsheet while validating each update manually.

Some of the pain points included:

- Searching for new versions and official release notes for each library.

- Reviewing release notes to identify major changes or potential impact.

- Updating the BuildSrc module, which contains library paths and versions.

- Updating each module one by one: creating a branch, syncing the project, updating the BuildSrc commit, and pushing to validate the build.

- Repeating these steps for every module, which made the process tedious and error-prone.

- Handling failures: if an issue surfaced during validation, the library version had to be downgraded in BuildSrc, and the same module update and validation steps had to be repeated, further increasing manual effort.

Upgrading libraries felt like running a marathon, one module at a time, with no guarantee of consistency at the finish line.

The Solution: From Manual Upgrades to an Automated System

To address the operational costs and risk associated with manual dependency upgrades, we took a step back. We treated library upgrades as a platform-level problem, not a per-module task. Our primary objective was to design a system that could scale with the codebase, remain safe to run frequently, and reduce human involvement to review rather than execution.

Early on, we identified three guiding principles for the system:

- Centralization – Use BuildSrc as the single source of truth for all dependency versions, ensuring consistency across modules and minimizing version drift.

- Repeatability – Implement automation that runs reliably without manual bookkeeping, so upgrades can be applied uniformly.

- Reversibility – Design rollbacks so downgrades are as simple and deterministic as upgrades, avoiding repeated manual steps when issues arise.

At a high level, the solution uses automation and AI-assisted analysis to identify new library versions, assess their impact, and apply upgrades across modules. Rather than manually tracking release notes and change logs, the system surfaces upgrades with clear context—what changed, why it is safe (or risky), and the expected impact.

We stopped chasing versions and started reviewing decisions.

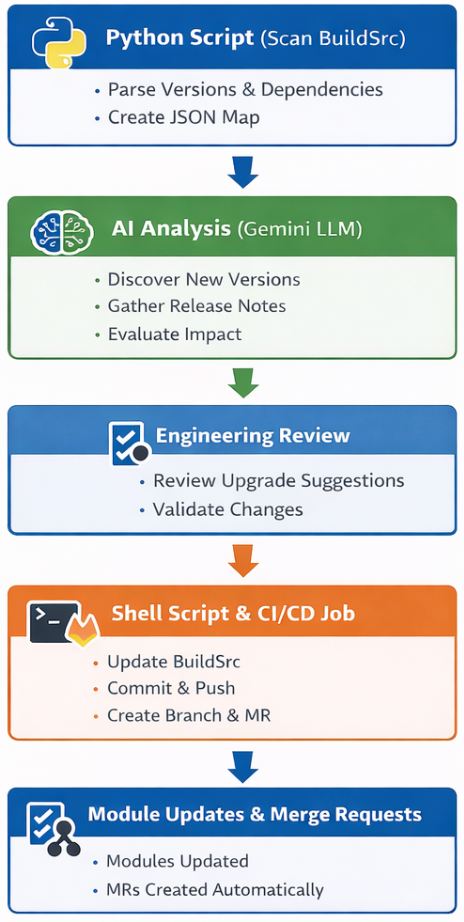

Automated Upgrade Architecture:

To streamline library upgrades and module updates, we designed an end-to-end automated pipeline that combines Python scripting, AI-assisted analysis, and CI/CD automation. The flow diagram illustrates how dependency updates move from discovery to review and finally to propagation across modules.

How it works:

- A Python script scans all library versions and dependency files in BuildSrc, creating a JSON map of current versions, paths, and placeholders for upgrade details.

- AI assistance (Gemini LLM) discovers newer stable library versions, summarizes release notes from official sources (e.g., Maven, Android Developers), and evaluates potential impact, including app size implications.

- Engineers review the recommendations via a Merge Request, validate context, and approve which upgrades should proceed.

- A shell script and CI/CD job then apply the BuildSrc updates, create branches for all affected modules, commit the changes, push to GitLab, and automatically generate merge requests with all relevant upgrade details.
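To make the discovery output concrete, here is a minimal Python sketch of one entry in the JSON map described above, assuming each entry is keyed by its Versions.kt constant. The field names (`constant`, `path`, `current`, `latest`, `update_type`, `reason`, `evidence`) are hypothetical: discovery fills the first three, and the rest stay as placeholders until the analysis step populates them.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List, Optional


@dataclass
class LibraryEntry:
    """One dependency discovered in BuildSrc (field names are illustrative)."""
    constant: str          # version constant in Versions.kt, e.g. "coroutines"
    path: str              # artifact path from Dependencies.kt, without version
    current: str           # version currently pinned in Versions.kt
    # Placeholders left empty by discovery and filled in by the analysis step.
    latest: Optional[str] = None
    update_type: Optional[str] = None   # e.g. "required" or "optional"
    reason: Optional[str] = None
    evidence: List[str] = field(default_factory=list)


def build_version_map(entries: List[LibraryEntry]) -> str:
    """Serialize discovered entries as a JSON object keyed by version constant."""
    return json.dumps({e.constant: asdict(e) for e in entries}, indent=2)
```

Keying by constant rather than by artifact path means every artifact sharing a version is represented once, which matches how BuildSrc pins versions.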

Library Discovery and Version Analysis:

BuildSrc Overview:

BuildSrc is a sub-module in the Android project that serves as the single source of truth for all library versions. It contains:

- Versions.kt – defines version numbers for all artifacts.

- Dependencies.kt – defines library paths and maps them to the corresponding versions.

All other modules depend on BuildSrc and use only the libraries they need. This keeps versions consistent across modules, simplifies dependency management, and allows automated upgrades to be applied safely.

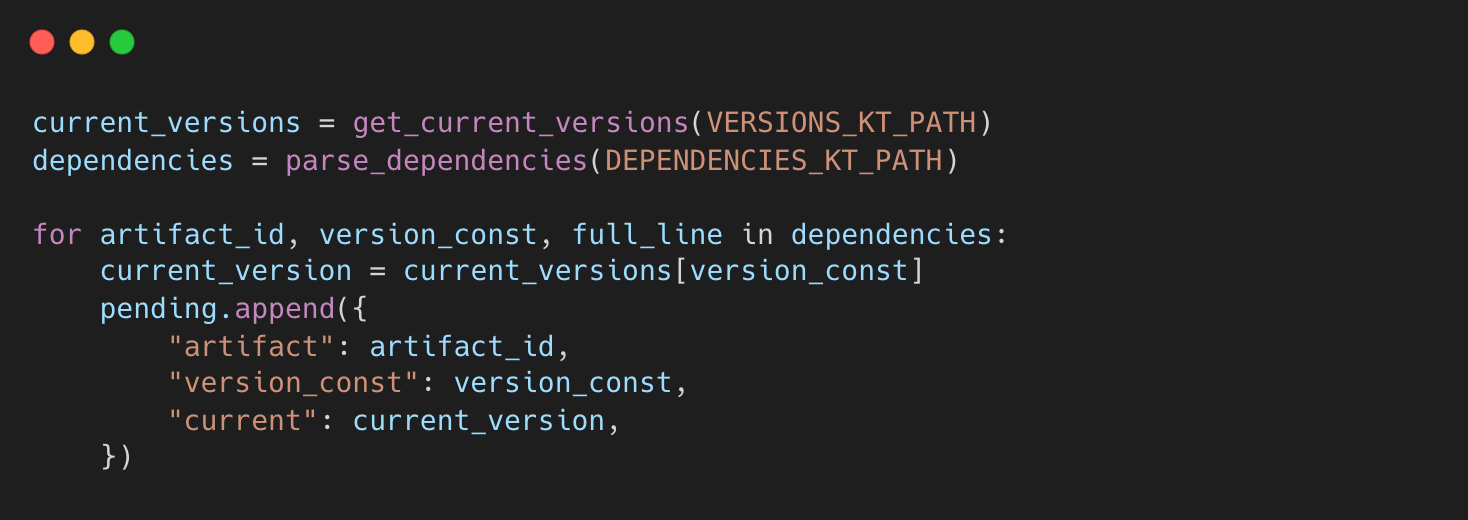

The process begins with a Python-based discovery script that scans the Android BuildSrc module. The script:

- Reads all current library versions from Versions.kt.

- Parses dependencies from Dependencies.kt to understand which libraries are used.

- Creates a list of libraries to check for upgrades.

- Filters duplicates, invalid entries, or artifacts with special version suffixes (for example, snapshot, alpha, or RC versions that should not be auto-upgraded).
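The discovery steps above could look roughly like the following sketch. It assumes Versions.kt declares `const val name = "x.y.z"` constants and Dependencies.kt builds coordinates with `${Versions.name}` string templates; the real files may differ, and the regular expressions and function names are illustrative.

```python
import re
from typing import Dict, List, Tuple

# Assumed source formats (illustrative, not the exact project files):
#   Versions.kt:     const val coroutines = "1.7.3"
#   Dependencies.kt: const val coroutinesCore = "group:artifact:${Versions.coroutines}"
VERSION_RE = re.compile(r'const\s+val\s+(\w+)\s*=\s*"([^"]+)"')
DEPENDENCY_RE = re.compile(r'"([\w.\-]+:[\w.\-]+):\$\{Versions\.(\w+)\}"')
# Pre-release markers that should never be auto-upgraded.
UNSTABLE_RE = re.compile(r"(alpha|beta|rc|snapshot)", re.IGNORECASE)


def parse_versions(versions_kt: str) -> Dict[str, str]:
    """Map each version constant in Versions.kt to its version string."""
    return dict(VERSION_RE.findall(versions_kt))


def parse_dependencies(dependencies_kt: str) -> List[Tuple[str, str]]:
    """Return (artifact_path, version_constant) pairs from Dependencies.kt."""
    return DEPENDENCY_RE.findall(dependencies_kt)


def upgrade_candidates(versions_kt: str, dependencies_kt: str) -> List[dict]:
    """Join both files and drop invalid or pre-release entries."""
    versions = parse_versions(versions_kt)
    candidates = []
    for path, constant in parse_dependencies(dependencies_kt):
        current = versions.get(constant)
        if current is None:              # invalid: constant not defined
            continue
        if UNSTABLE_RE.search(current):  # snapshot/alpha/beta/RC: skip
            continue
        candidates.append({"path": path, "constant": constant, "current": current})
    return candidates
```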

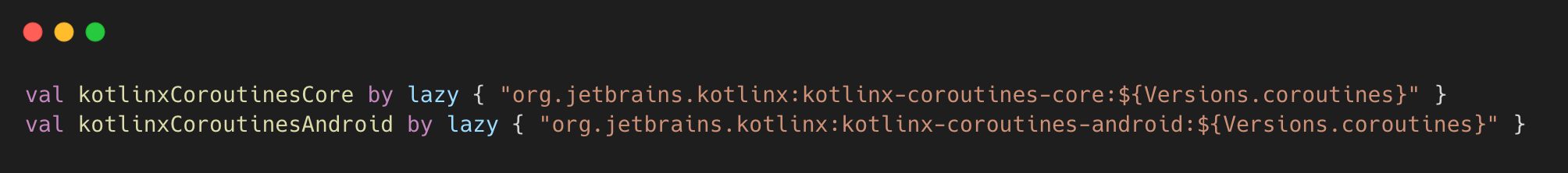

For example, two dependencies in Dependencies.kt can both use the same version constant, Versions.coroutines. The script detects such duplicates and processes only one entry.

This stage ensures that only valid, actionable dependencies are passed on for AI analysis, avoiding noise or errors in later steps.
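De-duplication can be sketched as grouping artifacts by the version constant they reference and checking one representative per group. This assumes discovery yields `(artifact path, version constant)` pairs; the coroutines artifact names in the example are illustrative, and the helpers `group_by_constant` and `unique_checks` are hypothetical.

```python
from typing import Dict, Iterable, List, Tuple


def group_by_constant(dependencies: Iterable[Tuple[str, str]]) -> Dict[str, List[str]]:
    """Group artifact paths by the version constant they reference (insertion order kept)."""
    groups: Dict[str, List[str]] = {}
    for path, constant in dependencies:
        groups.setdefault(constant, []).append(path)
    return groups


def unique_checks(dependencies: Iterable[Tuple[str, str]]) -> List[Tuple[str, str]]:
    """One (representative_path, constant) per version constant: the first artifact seen."""
    return [(paths[0], constant) for constant, paths in group_by_constant(dependencies).items()]
```

Grouping rather than simply discarding duplicates keeps the full list of artifacts that share a constant, which is useful context when reviewing the upgrade.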

AI-Assisted Release Note Analysis and Upgrade Recommendations:

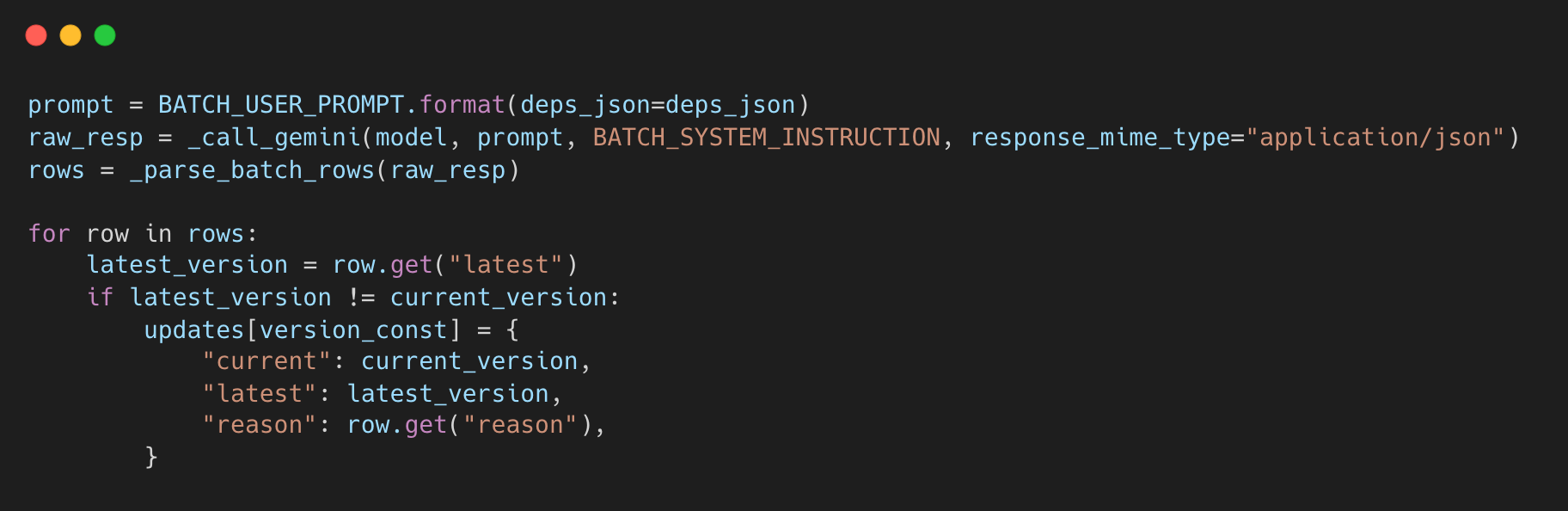

Once discovery is complete, the system uses Gemini LLM to evaluate each dependency in controlled batches, providing context-specific prompts that guide the model to identify which libraries require updates and which have optional updates available, along with relevant release note information. These prompts can be updated or improved in the future, making the system flexible and adaptable.

We chose Gemini 2.5 for this workflow because it provided more accurate results for our use case compared to GPT-4.0. In addition, Gemini 2.5 supports web search capabilities, allowing the model to reference official sources (like Maven or Android Developers) directly, improving the reliability and relevance of upgrade recommendations.
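The production prompts are not published, so the sketch below is a hypothetical illustration of a batch prompt that constrains the model to stable versions, official sources, and a strict JSON array. The wording, the `RESPONSE_SHAPE` fields, and the required/optional criteria are assumptions; the actual Gemini API call (with search grounding) is deliberately left out.

```python
import json
from typing import Dict, List

# Hypothetical response contract; field names are illustrative.
RESPONSE_SHAPE = {
    "name": "artifact path, copied unchanged",
    "current": "current version, copied unchanged",
    "latest": "latest stable version from official sources",
    "update_type": "required | optional | none",
    "reason": "one sentence grounded in the release notes",
    "evidence": ["release-notes or changelog URL"],
}


def build_batch_prompt(batch: List[Dict[str, str]]) -> str:
    """Build one prompt for a batch of {'name': ..., 'current': ...} dependencies."""
    lines = [
        "You are reviewing third-party library upgrades for an Android app.",
        "For each dependency, find the latest STABLE version using official sources",
        "such as Maven Central and Android Developers release notes.",
        "Ignore alpha, beta, RC and snapshot releases.",
        "Use 'required' only for security fixes, breaking API changes or removals;",
        "documentation-only or minor fixes are 'optional'.",
        "Respond with a JSON array only, one object per dependency, shaped like:",
        json.dumps(RESPONSE_SHAPE),
        "",
        "Dependencies:",
    ]
    lines += [f"- {dep['name']} (current: {dep['current']})" for dep in batch]
    return "\n".join(lines)
```

Keeping the rules in plain list form makes them easy to revise as the prompts evolve, without touching the code that consumes the response.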

- Dependencies are sent to Gemini LLM in controlled batches (currently 5 dependencies per batch), ensuring efficient processing without overloading the model.

- For each dependency, the LLM identifies the latest stable version, an update recommendation (required vs. optional) based on release notes and changelogs, and supporting evidence.

- Only real updates (current ≠ latest) are recorded in JSON.

- Each update includes the reason for the upgrade and metadata for engineers to review.
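The batching and filtering rules above are simple enough to show directly. This is a sketch, assuming each analysis result is a dict with `current` and `latest` keys; `BATCH_SIZE = 5` mirrors the current batch size mentioned above.

```python
from typing import Dict, List, Sequence, TypeVar

T = TypeVar("T")

BATCH_SIZE = 5  # current batch size used for Gemini requests


def chunked(items: Sequence[T], size: int = BATCH_SIZE) -> List[Sequence[T]]:
    """Split items into consecutive batches of at most `size` elements."""
    if size < 1:
        raise ValueError("size must be positive")
    return [items[i:i + size] for i in range(0, len(items), size)]


def real_updates(results: List[Dict]) -> List[Dict]:
    """Keep only results whose latest stable version differs from the current one."""
    return [r for r in results if r.get("latest") and r["latest"] != r["current"]]
```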

With this approach, engineers no longer need to manually search release notes and versions. The AI provides a structured recommendation that engineers can validate and approve (or reject) via merge requests.

Automating Multi-Module Propagation:

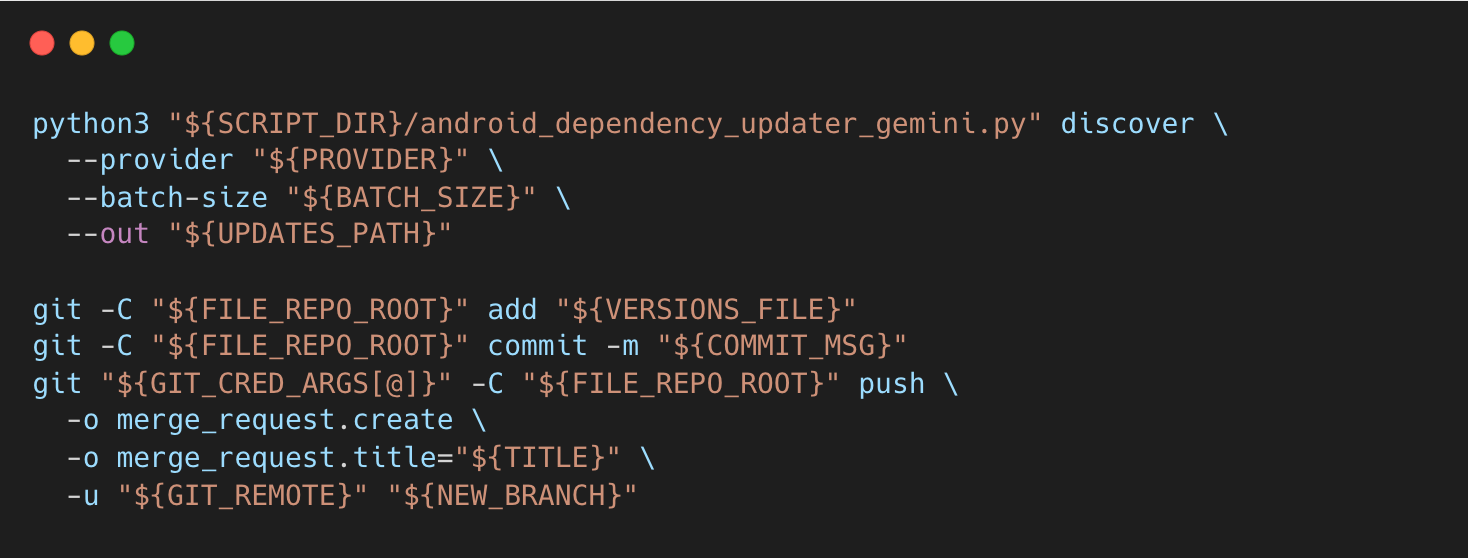

With upgrade decisions finalized, automation takes over. A lightweight shell script and CI/CD job consume the generated metadata and apply changes in a consistent, repeatable way.

- Runs the Python discovery script to fetch library updates.

- Stages and commits changes to

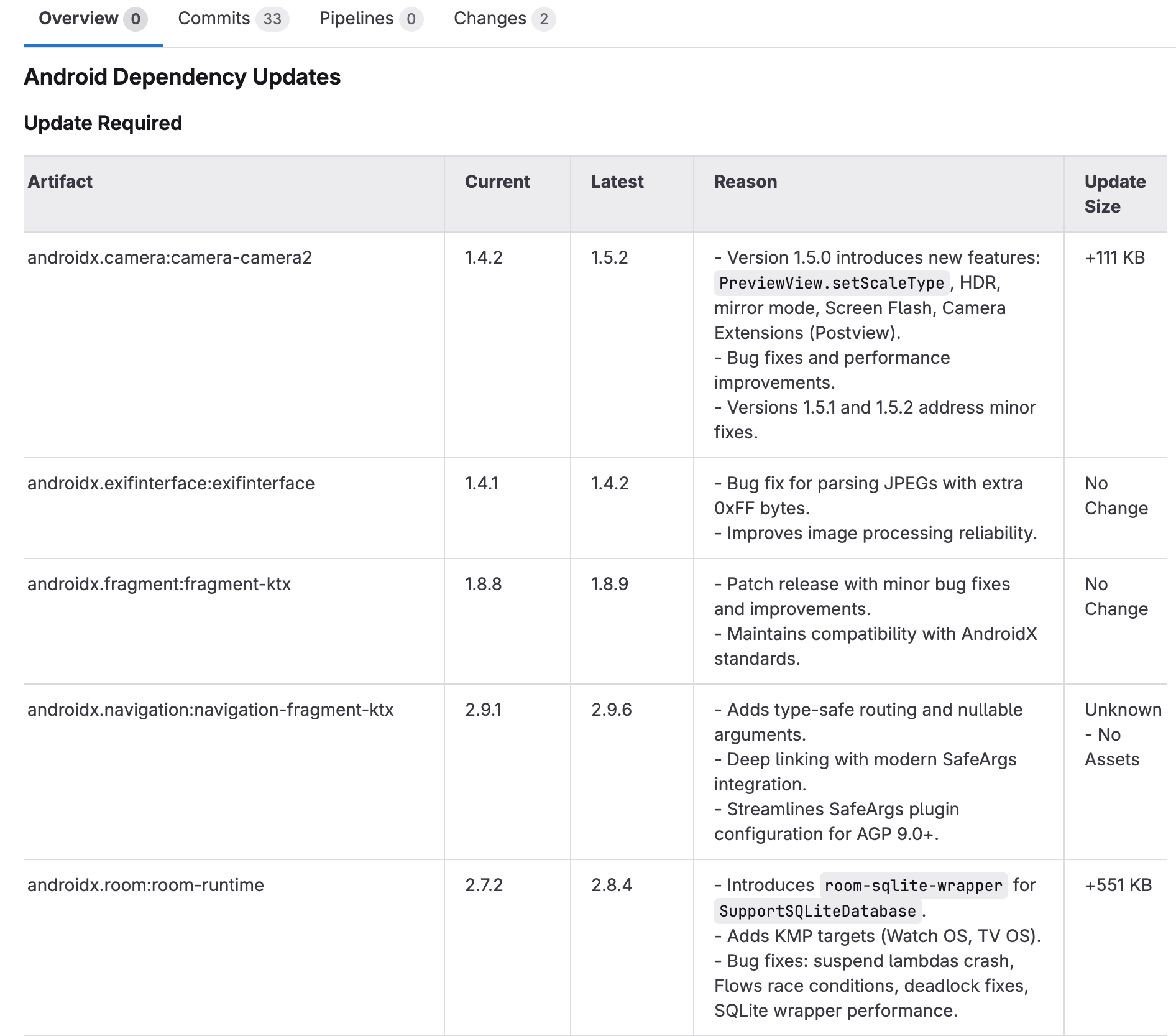

Versions.ktautomatically. - Pushes a branch and raises a Merge Request with all metadata, including: Current vs latest versions, Update reason, Size Impact, Evidence links (release notes, changelogs)

- The MR creation process ensures updates are centralized, auditable, and consistent across all modules.

- Rollbacks and downgrades use the same workflow: updating the BuildSrc commit triggers the same propagation pipeline, keeping all modules in sync and avoiding one-off manual fixes.

This step automates what used to be a multi-day, manual task, ensuring that all dependent modules are updated simultaneously, reducing human error and version drift.
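The propagation step itself is a shell script whose exact edits are not shown, so the following sketch is written in Python instead, for illustration. It shows how a version change in Versions.kt can be applied while leaving all other text untouched, assuming the `const val name = "x.y.z"` format. Because a rollback is just an older target version, the same hypothetical `apply_versions` call covers upgrades and downgrades.

```python
import re
from typing import Dict

# Captures: 1 = prefix up to the opening quote, 2 = constant name, 3 = version.
VERSION_LINE_RE = re.compile(r'(const\s+val\s+(\w+)\s*=\s*")([^"]*)"')


def apply_versions(versions_kt: str, targets: Dict[str, str]) -> str:
    """Set version constants in Versions.kt, leaving all other text untouched.

    Upgrades and rollbacks are the same operation: a rollback simply passes
    the previous version as the target.
    """
    known = {m.group(2) for m in VERSION_LINE_RE.finditer(versions_kt)}
    unknown = sorted(set(targets) - known)
    if unknown:
        raise ValueError(f"unknown version constants: {unknown}")

    def substitute(match):
        name, old = match.group(2), match.group(3)
        return f'{match.group(1)}{targets.get(name, old)}"'

    return VERSION_LINE_RE.sub(substitute, versions_kt)
```

Failing fast on unknown constants keeps a typo in the approved list from silently producing a commit that changes nothing.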

Measurable Outcomes and Developer Impact

The automated upgrade system delivered measurable improvements in developer productivity and operational efficiency:

- Reduced manual upgrade effort per cycle by ~50%.

- Cut end-to-end upgrade rollout time from 4 days to 2 days.

- Increased the frequency of safe library upgrades from quarterly to monthly, without adding developer toil.

Challenges and How We Addressed Them

While building and running the automated upgrade system, several practical challenges influenced the final design:

1. Prompt Engineering and Deterministic LLM Output

One of the earliest challenges was making LLM output reliable enough for automation. Release notes are often ambiguous, and naïve prompts tend to over-interpret minor or documentation-only changes.

Key challenges included:

- Distinguishing high-impact changes from routine or optional updates.

- Avoiding false positives for low-risk releases.

- Enforcing a strict, CI-consumable JSON response format.

We addressed this through multiple iterations of prompt design, explicit constraints, example-driven guidance, and defensive validation, ensuring LLM responses were predictable, structured, and safe to use in CI/CD workflows.
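As one example of defensive validation, a batch response can be checked before anything in CI consumes it. This sketch assumes the hypothetical response fields `name`, `current`, `latest`, `update_type`, `reason`, and `evidence`; any missing key, unexpected `update_type`, or mismatch between requested and returned dependencies rejects the whole batch so it can be retried.

```python
import json
from typing import List, Set

REQUIRED_KEYS = {"name", "current", "latest", "update_type", "reason", "evidence"}
UPDATE_TYPES = {"required", "optional", "none"}


def parse_llm_batch(raw: str, expected: Set[str]) -> List[dict]:
    """Defensively validate one batch response; raise ValueError on any violation."""
    # Tolerate prose or a Markdown fence around the array, but nothing else.
    start, end = raw.find("["), raw.rfind("]")
    if start == -1 or end < start:
        raise ValueError("response contains no JSON array")
    data = json.loads(raw[start:end + 1])  # JSONDecodeError subclasses ValueError

    for item in data:
        if not isinstance(item, dict):
            raise ValueError("every array element must be an object")
        missing = REQUIRED_KEYS - set(item)
        if missing:
            raise ValueError(f"{item.get('name')}: missing keys {sorted(missing)}")
        if item["update_type"] not in UPDATE_TYPES:
            raise ValueError(f"{item['name']}: invalid update_type {item['update_type']!r}")
        if not isinstance(item["evidence"], list):
            raise ValueError(f"{item['name']}: evidence must be a list of URLs")

    returned = {item["name"] for item in data}
    if returned != expected:
        raise ValueError(f"dependency mismatch: expected {sorted(expected)}, got {sorted(returned)}")
    return data
```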

2. Improving Merge Request Signal for Reviewers

Initial merge requests were verbose and difficult to review at scale, increasing cognitive load and slowing approvals. The challenge shifted from generating data to presenting only meaningful signal.

We improved review efficiency by:

- Surfacing only actionable upgrades (current ≠ latest)

- Presenting changes in concise tabular summaries

- Embedding direct release notes and evidence links

- Enforcing consistent, CI-driven MR templates

This significantly reduced review time and improved reviewer confidence.
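A tabular MR summary of this kind can be generated from the analysis results. The sketch below assumes the same hypothetical result fields (`name`, `current`, `latest`, `update_type`, `reason`, `evidence`); the column layout is illustrative rather than the actual MR template, and the output is a Markdown table, which GitLab renders in MR descriptions.

```python
from typing import Dict, List


def render_mr_table(updates: List[Dict]) -> str:
    """Render actionable upgrades as a Markdown table for the MR description."""
    lines = [
        "| Library | Current | Latest | Type | Reason | Evidence |",
        "|---|---|---|---|---|---|",
    ]
    for u in updates:
        if u["latest"] == u["current"]:
            continue  # surface only actionable upgrades
        reason = u["reason"].replace("|", "\\|")  # keep table cells intact
        links = ", ".join(f"[{i}]({url})" for i, url in enumerate(u["evidence"], 1))
        lines.append(
            f"| `{u['name']}` | {u['current']} | {u['latest']} "
            f"| {u['update_type']} | {reason} | {links} |"
        )
    return "\n".join(lines)
```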

3. Scaling LLM Execution in CI Pipelines

Running LLM analysis sequentially across many dependencies quickly became a bottleneck, increasing CI runtimes and making the pipeline fragile to rate limits and transient failures.

Key challenges included:

- CI jobs exceeding acceptable execution times.

- Rate limits or intermittent failures blocking the entire pipeline.

- Inefficient retries when a single failure invalidated successful results.

We addressed this by processing dependencies in controlled batches with fault isolation and safe retries. Failed batches could be retried independently, while successful results were preserved through idempotent handling and bounded retry logic. This significantly improved pipeline performance, reliability, and cost predictability without sacrificing accuracy.
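The batch-level fault isolation and bounded retries described above can be sketched as follows. `analyze` stands in for whatever calls the model and validates its output; the attempt limit, the exponential backoff delays, and the `done` parameter used for idempotent re-runs are illustrative assumptions, not the pipeline's actual settings.

```python
import time
from typing import Any, Callable, Dict, List, Optional, Sequence, Tuple


def run_batches(
    batches: Sequence[Any],
    analyze: Callable[[Any], Any],
    max_attempts: int = 3,
    base_delay: float = 2.0,
    done: Optional[Dict[int, Any]] = None,
    sleep: Callable[[float], None] = time.sleep,
) -> Tuple[Dict[int, Any], List[int]]:
    """Analyze batches independently with bounded, backed-off retries.

    Returns (results, failed). `results` maps batch index -> output; passing a
    previous run's results as `done` skips those batches, so re-running the job
    is idempotent and only retries what failed.
    """
    results: Dict[int, Any] = dict(done or {})
    failed: List[int] = []
    for index, batch in enumerate(batches):
        if index in results:
            continue  # already succeeded in an earlier run
        for attempt in range(1, max_attempts + 1):
            try:
                results[index] = analyze(batch)
                break
            except Exception:  # e.g. rate limit, timeout, invalid JSON
                if attempt == max_attempts:
                    failed.append(index)  # isolate this batch; keep going
                else:
                    sleep(base_delay * 2 ** (attempt - 1))  # exponential backoff
    return results, failed
```

Injecting `sleep` keeps the retry logic testable without real delays.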

4. Balancing Automation with Human Ownership

A final challenge was defining the right boundary between automation and responsibility. Fully automated upgrades risked missing architectural context, while excessive manual gating reduced efficiency.

The final design automates discovery and propagation but keeps approval and ownership with engineers through merge requests, preserving both speed and accountability.

Conclusion

By automating library upgrades and multi-module propagation, we transformed what used to be a tedious process into a reliable, repeatable workflow. Python scripts and AI-assisted analysis handle dependency discovery, version checks, and update recommendations, while CI/CD pipelines apply updates consistently across all modules.

The system ensures that upgrades and downgrades follow the same automated workflow, keeping modules in sync, reducing conflicts, and providing full traceability. Planned enhancements, such as smarter batch handling, version-aware bumps, and dependency-aware execution, will further extend the workflow.

With automation in place, managing library versions at scale is no longer a manual chore, but a streamlined, reliable workflow that saves time and keeps the codebase healthy as it grows.


Join us

Scalability, reliability and maintainability are the three pillars that govern what we build at Halodoc Tech. We are actively looking for engineers at all levels and if solving hard problems with challenging requirements is your forte, please reach out to us with your resumé at careers.india@halodoc.com.

About Halodoc

Halodoc is the number one all-around healthcare application in Indonesia. Our mission is to simplify and deliver quality healthcare across Indonesia, from Sabang to Merauke.

Since 2016, Halodoc has been improving health literacy in Indonesia by providing user-friendly healthcare communication, education, and information (KIE). In parallel, our ecosystem has expanded to offer a range of services that facilitate convenient access to healthcare, starting with Homecare by Halodoc as a preventive care feature that allows users to conduct health tests privately and securely from the comfort of their homes; My Insurance, which allows users to access the benefits of cashless outpatient services in a more seamless way; Chat with Doctor, which allows users to consult with over 20,000 licensed physicians via chat, video or voice call; and Health Store features that allow users to purchase medicines, supplements and various health products from our network of over 4,900 trusted partner pharmacies. To deliver holistic health solutions in a fully digital way, Halodoc offers Digital Clinic services including Haloskin, a trusted dermatology care platform guided by experienced dermatologists.

We are proud to be trusted by global and regional investors, including the Bill & Melinda Gates Foundation, Singtel, UOB Ventures, Allianz, GoJek, Astra, Temasek, and many more. With over USD 100 million raised to date, including our recent Series D, our team is committed to building the best personalized healthcare solutions — and we remain steadfast in our journey to simplify healthcare for all Indonesians.