Best Practices for Optimising Mobile App Automation Tests

Automation testing shortens the feedback cycle and speeds up validation at each phase of product development. To help customers make the most of our product and to simplify healthcare, Halodoc constantly builds features that solve customer pain points. This continually expands our automation test suite and, with it, the suite's execution time. That makes optimizing our automation codebase vital.

This blog describes how we reduced test execution time by 60%. It also shares the best practices that helped us achieve this.

The automation framework at Halodoc is a Maven project that follows a Behaviour Driven Development (BDD) approach. We use Cucumber to write feature files and TestNG for assertions, and the scripts run on Appium for mobile automation. CI/CD pipelines handle the scheduled runs of our automation suites (Smoke, Sanity, Regression, etc.).

Challenges with App Automation Execution

As the number of features/scenarios grows, so does automation execution time. Before the optimization, our automated features were not independent of each other, so running all features in parallel was not possible. Across our 30+ features, many scenarios/steps were duplicated. In many places, UI interactions were used where API calls could have replaced them.

Considering these challenges, we identified a few best practices to follow:

- Refactor scenarios and feature files

- Use dedicated test data for every feature

- Do not create test data as part of your automation execution

- Replace static waits with explicit/fluent waits

- Use API calls to avoid unnecessary UI actions

- Follow strict Page Object Model

Refactor scenarios and feature files

We build new features on top of existing ones. As a result, some features are exclusive (independent of others) while others are inclusive (interdependent).

- Maintained separate feature files for business flows that are exclusive of each other. This made feature files more readable and easier to debug and maintain. It also allowed any feature file to be executed independently whenever required.

- Grouped dependent scenarios into a single feature file or a small set of feature files. Because dependent scenarios are not spread across many feature files, a feature module can be tested without executing several feature files. A feature file typically contains functional and non-functional scenarios, including corner/edge cases. Wherever steps were repeated across scenarios (e.g. navigating to the same page to perform the same tasks and verifications), we combined them into a single scenario without compromising on validations. This considerably reduced the overall execution time of the test suite.

- Distributed scenarios across feature files to reduce the duration of parallel execution. After applying the first two practices, a feature file may still contain too many scenarios and take too long to execute. In that case, we broke it into multiple feature files so that each feature file takes roughly the same time to run on its own. For example, suppose feature file1 contains 40 scenarios that take 60 min and feature file2 contains 30 scenarios that take 30 min. We split feature file1 into two feature files of about 30 min each. When all three feature files run in parallel, the total execution time is ~30 min instead of 60 min.
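The arithmetic behind this example can be sketched in a few lines of Java. The sketch makes two assumptions: each feature file runs on its own device or thread, and a file's scenarios can be divided into parts of roughly equal duration. FeatureSplitPlanner and its methods are hypothetical names used for illustration, not part of our framework.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

class FeatureSplitPlanner {

    /** Ceiling division: how many parts a file needs so that no part exceeds the target. */
    static int partsNeeded(int durationMinutes, int targetMinutes) {
        return (durationMinutes + targetMinutes - 1) / targetMinutes;
    }

    /** Splits each file's duration into near-equal parts that are no longer than the target. */
    static List<Integer> split(List<Integer> fileDurations, int targetMinutes) {
        List<Integer> parts = new ArrayList<>();
        for (int duration : fileDurations) {
            int n = partsNeeded(duration, targetMinutes);
            for (int i = 0; i < n; i++) {
                // Spread the remainder so that the parts add up to the original duration
                parts.add(duration / n + (i < duration % n ? 1 : 0));
            }
        }
        return parts;
    }

    /** With one file per device/thread, the suite ends when its longest file ends. */
    static int parallelWallClock(List<Integer> fileDurations) {
        return Collections.max(fileDurations);
    }

    public static void main(String[] args) {
        List<Integer> before = List.of(60, 30); // feature file1, feature file2 (minutes)
        List<Integer> after = split(before, 30);
        System.out.println("Before: " + before + " -> " + parallelWallClock(before) + " min");
        System.out.println("After:  " + after + " -> " + parallelWallClock(after) + " min");
    }
}
```

Only files longer than the target are split, because the slowest file sets the total time. Real scenario durations are uneven, so in practice the split is approximate.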

Use dedicated test data for every feature

Mobile apps are used by a wide range of users with diverse device configurations and OS versions. To verify device compatibility, the app has to be tested on those devices, so we run the suite in parallel on multiple devices. This requires splitting the features in our suite into smaller groups, which is only possible when the features are independent of each other.
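Below is a minimal sketch of running independent feature groups on several devices at the same time. It uses a fixed thread pool with one thread per device. The device IDs, feature file names and the runner callback are hypothetical. In a real suite, the callback would start a Cucumber run against an Appium session for that device.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.function.BiPredicate;

class ParallelDeviceRun {

    /**
     * Runs each device's group of feature files on its own thread and waits for all of them.
     * The runner returns true when every feature in the group passed on that device.
     */
    static Map<String, Boolean> runOnDevices(Map<String, List<String>> featuresByDevice,
                                             BiPredicate<String, List<String>> runner) {
        // One thread per device (featuresByDevice must not be empty)
        ExecutorService pool = Executors.newFixedThreadPool(featuresByDevice.size());
        try {
            Map<String, Future<Boolean>> futures = new LinkedHashMap<>();
            featuresByDevice.forEach((device, features) ->
                    futures.put(device, pool.submit(() -> runner.test(device, features))));

            Map<String, Boolean> results = new LinkedHashMap<>();
            for (Map.Entry<String, Future<Boolean>> entry : futures.entrySet()) {
                try {
                    results.put(entry.getKey(), entry.getValue().get()); // blocks until that device finishes
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                    throw new IllegalStateException("Interrupted while waiting for " + entry.getKey(), e);
                } catch (ExecutionException e) {
                    throw new IllegalStateException("Run failed on " + entry.getKey(), e.getCause());
                }
            }
            return results;
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        Map<String, List<String>> groups = new LinkedHashMap<>();
        groups.put("device-1", List.of("consultation.feature", "pharmacy.feature"));
        groups.put("device-2", List.of("lab.feature", "order_history.feature"));

        Map<String, Boolean> results = runOnDevices(groups, (device, features) -> {
            // Placeholder for launching the real runner against this device
            System.out.println(device + " running " + features);
            return true;
        });
        System.out.println(results);
    }
}
```

Each device's run blocks only its own thread, so the total time is roughly that of the slowest group. For this reason, the groups are worth balancing.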

To run features independently, each feature needs its own dedicated user data that matches its scenarios. Dedicated test data lets features run in parallel without interfering with one another.

Test data is used by many test scripts across the test suite. Any change to it can break test execution, whether the change is intentional or accidental, and whether it is made manually or by another test script. For example, the card payment flow needs a card number and PIN, and changing either of them may break our test. We can keep such test data in a separate properties file, outside our test scripts. The card number and PIN can then be updated in that one text file.
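Below is a minimal sketch of reading feature-scoped test data from a properties file with java.util.Properties. The key naming scheme (a feature prefix such as payment. or orderhistory.) is an assumption, as are the file location mentioned in the comment and all values. The values are dummies.

```java
import java.io.IOException;
import java.io.Reader;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.Properties;

class FeatureTestData {

    private final Properties props = new Properties();

    private FeatureTestData(Reader source) {
        try {
            props.load(source);
        } catch (IOException e) {
            throw new UncheckedIOException("Could not load test data", e);
        }
    }

    /**
     * In the framework the Reader would typically come from a file, e.g.
     * Files.newBufferedReader(Path.of("src/test/resources/testdata.properties")) (hypothetical path).
     */
    static FeatureTestData load(Reader source) {
        return new FeatureTestData(source);
    }

    /** Looks up a value scoped to one feature, e.g. get("payment", "card.number"). */
    String get(String feature, String key) {
        String value = props.getProperty(feature + "." + key);
        if (value == null) {
            throw new IllegalArgumentException("Missing test data: " + feature + "." + key);
        }
        return value;
    }

    public static void main(String[] args) {
        // Dummy values for illustration only
        String file = String.join("\n",
                "payment.card.number=1234567890123456",
                "payment.card.pin=123456",
                "orderhistory.user.phone=+6281200000001");
        FeatureTestData data = load(new StringReader(file));
        System.out.println("Card used by the payment feature: " + data.get("payment", "card.number"));
    }
}
```

Prefixing keys by feature keeps each feature's data separate. Features running in parallel therefore never share, and overwrite, the same user or card. Changing a card number or PIN then means editing one line of the properties file rather than the test code.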

Do not create test data as part of your automation execution

Each test case performs a specific task. Some need to create data as part of their execution, while others only validate existing data or perform actions on it. For example, consider the following test scenarios in the Halodoc app:

- Validate that a new user (a patient) can sign up on the Halodoc app for an online consultation with a doctor.

- Validate order history (doctor appointments, pharmacy delivery orders, lab appointments, etc) of an existing user for a specific order.

In scenario 1, it is necessary to create a new user for every execution.

In scenario 2, there is no need to create a new user and place different orders to build up an order history. Instead, use an existing user who already has the necessary order history. The purpose of the scenario is to validate the order history. Reusing existing data, rather than creating it during the test, reduces the overall test execution time.

Replace static waits with explicit/fluent waits

Static waits (Thread.sleep()) were used to wait for a system state whenever the time needed to reach it was uncertain. Examples include the dismissal of a pop-up, a page load, or lazy loading after a page scroll.

For example,
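Below is a minimal, self-contained sketch of the static-wait pattern. It uses a simulated pop-up, an assumption for illustration, that needs 6 seconds to disappear. The step sleeps for a fixed 5 seconds and checks only once. In this setup it would normally report the pop-up as still present, even though waiting slightly longer would have succeeded.

```java
import java.util.function.BooleanSupplier;

class StaticWaitExample {

    /** Anti-pattern: pause for a fixed time, then check the state exactly once. */
    static boolean checkAfterStaticWait(BooleanSupplier condition, long sleepMillis) {
        try {
            Thread.sleep(sleepMillis); // the step blocks for the full duration, ready or not
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return condition.getAsBoolean(); // no retry: a state reached later is missed
    }

    public static void main(String[] args) {
        long start = System.currentTimeMillis();
        // Simulated pop-up that takes 6 seconds to be dismissed
        BooleanSupplier popUpDismissed = () -> System.currentTimeMillis() - start >= 6_000;
        boolean dismissed = checkAfterStaticWait(popUpDismissed, 5_000); // Thread.sleep(5000)
        System.out.println("Pop-up dismissed after 5 s static wait? " + dismissed);
    }
}
```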

A call such as Thread.sleep(5000) halts the current thread for 5 seconds without checking any condition. If the desired state is reached only after those 5 seconds, the subsequent validations/assertions can still fail. If it is reached earlier, the rest of the wait is wasted.

We handled system notification issues with developer options and API calls, and used explicit waits for page load and scroll issues.

Explicit waits are dynamic WebDriver waits. An explicit/fluent wait pauses the script only until a certain state is reached (such as an element becoming visible or clickable), up to the specified timeout. This ensures that the result/state is obtained within an acceptable period. If the condition is not met within the timeout, a TimeoutException is thrown. A fluent wait additionally lets you configure the polling interval and which exceptions to ignore while polling.

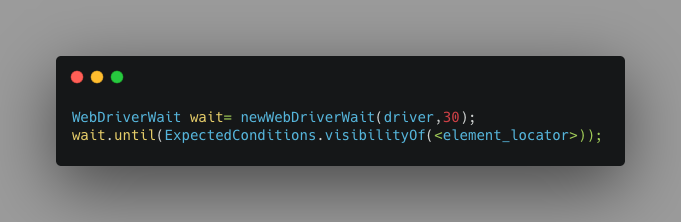

An explicit wait is implemented by combining the WebDriverWait and ExpectedConditions classes.
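A WebDriverWait/ExpectedConditions call needs a live Appium driver. The sketch below therefore reproduces, in plain Java, the polling idea behind explicit/fluent waits:
- re-evaluate a condition at a fixed interval;
- ignore exceptions thrown while the element is not ready;
- return as soon as the condition holds;
- throw once the timeout expires.

PollingWait and WaitTimeoutException are hypothetical names. The simulated element, which becomes visible after about 1.5 seconds, stands in for a real UI element.

```java
import java.time.Duration;
import java.util.function.Supplier;

class PollingWait {

    /** Thrown when the condition is still not met after the timeout. */
    static class WaitTimeoutException extends RuntimeException {
        WaitTimeoutException(String message) {
            super(message);
        }
    }

    /**
     * Re-evaluates the condition every pollInterval until it returns a value that is
     * neither null nor Boolean.FALSE, or until the timeout expires.
     */
    static <T> T until(Supplier<T> condition, Duration timeout, Duration pollInterval) {
        long deadline = System.nanoTime() + timeout.toNanos();
        RuntimeException lastError = null;
        while (true) {
            try {
                T value = condition.get();
                if (value != null && !Boolean.FALSE.equals(value)) {
                    return value; // condition met: continue immediately
                }
            } catch (RuntimeException e) {
                // e.g. element not rendered yet. This sketch ignores every RuntimeException;
                // Selenium's FluentWait lets you choose which exceptions to ignore.
                lastError = e;
            }
            if (System.nanoTime() - deadline >= 0) {
                WaitTimeoutException timeoutError = new WaitTimeoutException(
                        "Condition not met within " + timeout.toMillis() + " ms");
                if (lastError != null) {
                    timeoutError.initCause(lastError);
                }
                throw timeoutError;
            }
            try {
                Thread.sleep(pollInterval.toMillis());
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new WaitTimeoutException("Interrupted while waiting");
            }
        }
    }

    public static void main(String[] args) {
        long start = System.currentTimeMillis();
        // Simulated element that becomes visible about 1.5 seconds after the step starts
        Supplier<Boolean> elementVisible = () -> System.currentTimeMillis() - start >= 1_500;
        until(elementVisible, Duration.ofSeconds(30), Duration.ofMillis(250));
        System.out.println("Element visible after ~" + (System.currentTimeMillis() - start) + " ms");
    }
}
```

Unlike the static sleep, the step continues roughly as soon as the element appears, and the 30-second timeout only bounds the worst case. In the real framework, Selenium's own WebDriverWait or FluentWait remains the better choice over a hand-rolled helper like this one.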

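For example, consider new WebDriverWait(driver, Duration.ofSeconds(30)).until(ExpectedConditions.visibilityOfElementLocated(locator)), where driver and locator come from your own setup. This blocks the current thread only until the element becomes visible. If the element is still not visible when the 30-second timeout expires, an exception is thrown.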

Use API calls to avoid unnecessary UI actions

API calls can be used to:

- Create test data without relying on UI-automated scripts, which reduces execution time.

- Check the state of the system before carrying out any UI validations.

- Trigger events (such as notifications) or check/change the status of systems external to the application under test. Examples: checking/updating the wallet balance before a wallet debit for a transaction, or checking product availability before placing an order.
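Below is a minimal sketch that uses Java's built-in java.net.http client to build API requests for two of these uses: creating test data, and checking a precondition before UI validation. The base URL, endpoint paths and JSON fields are hypothetical placeholders for whatever test-data APIs your backend exposes. Since those endpoints do not exist, main only builds and prints a request.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;

class TestDataApi {

    private final String baseUrl; // hypothetical test-environment base URL

    TestDataApi(String baseUrl) {
        this.baseUrl = baseUrl;
    }

    /** Creates an order directly through a (hypothetical) API instead of driving the UI. */
    HttpRequest createOrderRequest(String userId, String productId) {
        String body = String.format("{\"userId\":\"%s\",\"productId\":\"%s\"}", userId, productId);
        return HttpRequest.newBuilder(URI.create(baseUrl + "/test-data/orders"))
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(body))
                .build();
    }

    /** Checks system state (e.g. wallet balance) before any UI validation starts. */
    HttpRequest walletBalanceRequest(String userId) {
        return HttpRequest.newBuilder(URI.create(baseUrl + "/test-data/wallets/" + userId + "/balance"))
                .GET()
                .build();
    }

    /** Sends a request and returns the response body, failing fast on a non-2xx status. */
    static String send(HttpClient client, HttpRequest request) throws IOException, InterruptedException {
        HttpResponse<String> response = client.send(request, HttpResponse.BodyHandlers.ofString());
        if (response.statusCode() / 100 != 2) {
            throw new IllegalStateException(request.uri() + " returned " + response.statusCode());
        }
        return response.body();
    }

    public static void main(String[] args) {
        TestDataApi api = new TestDataApi("https://test-env.example.com/api");
        HttpRequest request = api.createOrderRequest("user-123", "product-456");
        // Against a real test environment: String json = send(HttpClient.newHttpClient(), request);
        System.out.println(request.method() + " " + request.uri());
    }
}
```

A step definition could call send(...) in a Given step. The UI scenario then starts from the required state without navigating through extra screens.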

Follow strict Page Object Model

In the Page Object Model (POM), each page (screen) of the app is represented by its own class. That class contains only the elements and actions of its page. Tests interact with the app only through these classes, which reduces code duplication and makes test cases easier to maintain.

Advantages of using POM:

- Easier maintenance of scripts

- Code reuse across classes

- Better readability and reliability of scripts

- Fewer duplicate elements/methods
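Below is a minimal Page Object Model sketch in plain Java. To keep it runnable without a device, it replaces the real Appium driver with a small hypothetical AppDriver interface and a recording fake. The locator IDs and the login flow are also illustrative assumptions.

```java
import java.util.ArrayList;
import java.util.List;

/** Minimal stand-in for the driver API a page object needs (hypothetical; really the Appium driver). */
interface AppDriver {
    void tap(String locator);
    void type(String locator, String text);
    boolean isDisplayed(String locator);
}

/** Page object: the login screen's locators and actions live only in this class. */
class LoginPage {
    // Hypothetical accessibility IDs; real locators come from the app under test
    private static final String PHONE_INPUT = "login_phone_input";
    private static final String CONTINUE_BUTTON = "login_continue_button";
    private static final String OTP_SCREEN = "otp_screen_title";

    private final AppDriver driver;

    LoginPage(AppDriver driver) {
        this.driver = driver;
    }

    /** Business-level action for step definitions; no locators leak into the steps. */
    LoginPage enterPhoneNumber(String phone) {
        driver.type(PHONE_INPUT, phone);
        return this;
    }

    /** Taps Continue and reports whether the OTP screen appeared. */
    boolean tapContinue() {
        driver.tap(CONTINUE_BUTTON);
        return driver.isDisplayed(OTP_SCREEN);
    }
}

/** Fake driver that records actions, so the page object can be exercised without a device. */
class RecordingDriver implements AppDriver {
    final List<String> actions = new ArrayList<>();

    public void tap(String locator) { actions.add("tap " + locator); }

    public void type(String locator, String text) { actions.add("type " + locator + " " + text); }

    // Pretend every screen is shown once Continue has been tapped
    public boolean isDisplayed(String locator) { return actions.contains("tap login_continue_button"); }
}

class PomDemo {
    public static void main(String[] args) {
        RecordingDriver driver = new RecordingDriver();
        boolean onOtpScreen = new LoginPage(driver).enterPhoneNumber("+6281200000001").tapContinue();
        System.out.println(driver.actions + " -> OTP screen shown: " + onOtpScreen);
    }
}
```

Step definitions call enterPhoneNumber(...) and tapContinue() instead of touching locators. If a locator changes, only LoginPage needs updating.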

Additional Improvements to consider

- Distribute scenarios across feature files to avoid duplication of scenarios and validations.

- Use soft assertions to cover independent validations in a test scenario (thereby combining multiple low-priority test cases into one).

- Follow a strict code review process.

- Run feature files in parallel across multiple devices.
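TestNG already provides soft assertions through org.testng.asserts.SoftAssert. You record failures with methods such as assertEquals and assertTrue, then call assertAll() at the end. The plain-Java sketch below only illustrates the mechanism, so that it runs without TestNG. SoftChecks is a hypothetical name and the screen values are made up.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Objects;

class SoftChecks {

    private final List<String> failures = new ArrayList<>();

    /** Records a mismatch instead of stopping the scenario at the first failure. */
    SoftChecks checkEquals(Object actual, Object expected, String message) {
        if (!Objects.equals(actual, expected)) {
            failures.add(message + ": expected [" + expected + "] but found [" + actual + "]");
        }
        return this;
    }

    SoftChecks checkTrue(boolean condition, String message) {
        if (!condition) {
            failures.add(message);
        }
        return this;
    }

    /** Call once at the end of the scenario: fails with every recorded problem at once. */
    void assertAll() {
        if (!failures.isEmpty()) {
            throw new AssertionError(failures.size() + " check(s) failed:\n" + String.join("\n", failures));
        }
    }

    public static void main(String[] args) {
        // Values a single order-details scenario might read from the screen (illustrative)
        String orderStatus = "Delivered";
        String paymentMethod = "Wallet";
        boolean invoiceButtonVisible = false;

        SoftChecks soft = new SoftChecks()
                .checkEquals(orderStatus, "Delivered", "Order status")
                .checkEquals(paymentMethod, "Card", "Payment method")
                .checkTrue(invoiceButtonVisible, "Invoice button visible");
        try {
            soft.assertAll();
        } catch (AssertionError e) {
            System.out.println(e.getMessage()); // both failures are reported together
        }
    }
}
```

Because every independent check still runs after an earlier one fails, several low-priority validations can share a single scenario without hiding each other's failures.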

Summary

Optimizing our automation codebase improves execution time and helps identify duplicate scenarios/steps. This blog covers some of the ways we sped up the automation suites for our mobile apps. By combining these optimizations with parallel execution, we reduced execution time by over 60%.

Join us

We are always looking out for top engineering talent across all roles for our tech team. If challenging problems that drive big impact enthral you, do reach out to us at careers.india@halodoc.com

About Halodoc

Halodoc is the number 1 all-around healthcare application in Indonesia. Our mission is to simplify and bring quality healthcare across Indonesia, from Sabang to Merauke. We connect 20,000+ doctors with patients in need through our Tele-consultation service. We partner with 3500+ pharmacies in 100+ cities to bring medicine to your doorstep. We've also partnered with Indonesia's largest lab provider to provide lab home services. To top it off, we have recently launched a premium appointment service that partners with 500+ hospitals and allows patients to book a doctor appointment inside our application. We are extremely fortunate to be trusted by our investors, such as the Bill & Melinda Gates Foundation, Singtel, UOB Ventures, Allianz, GoJek, Astra, Temasek and many more. We recently closed our Series C round and in total have raised around USD$180 million for our mission. Our team works tirelessly to make sure that we create the best healthcare solution personalised for all of our patients' needs, and we are continuously on a path to simplify healthcare for Indonesia.