Vault - High Availability and Scalability

News about data breaches, leaked customer information and stolen passwords for critical infrastructure is becoming very common. Many of these incidents seem to be related to mismanaged credentials, unencrypted passwords, secrets pushed to git repositories, or secrets hard-coded in the application, leaving no room for rotation.

This has led to increasing demand for secrets management tools such as:

- AWS Secrets Manager

- HashiCorp Vault

- Kubernetes Secrets

- AWS Parameter Store

- Confidant

At Halodoc, we analyzed the tools listed above and finally decided to move ahead with HashiCorp Vault because of the features it offers.

HashiCorp Vault

HashiCorp Vault is an open-source tool that provides a secure, reliable way to store and distribute secrets such as API keys, access tokens and passwords. Software like Vault is critically important when deploying applications that rely on secrets or other sensitive data. Vault provides high-level policy management, secret leasing, audit logging, and automatic revocation to protect sensitive information, all accessible through its UI, CLI, or HTTP API. Every secret stored in it has an associated lease, which enables key usage auditing, key rolling and automatic revocation. Vault provides multiple revocation mechanisms to give operators a clear “break glass” procedure in case of a potential compromise. It offers a unified interface to store secrets while providing tight access control and recording a detailed audit log. It can be deployed to practically any environment and does not require any special hardware such as physical HSMs (Hardware Security Modules).
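As a small illustration of the HTTP API mentioned above, here is a minimal sketch of reading a secret from Python. It assumes a KV version 2 secrets engine mounted at `kv` (which is what the `kv/data/...` path used in the load tests later in this post suggests) and token authentication through the `X-Vault-Token` header. The function names and the sample secret are hypothetical and only serve the example.

```python
import json
import urllib.request


def build_read_request(base_url: str, secret_path: str, token: str) -> urllib.request.Request:
    """Build (but do not send) a GET request for a secret over Vault's HTTP API."""
    url = f"{base_url.rstrip('/')}/v1/{secret_path.lstrip('/')}"
    # Vault authenticates API calls with a token sent in the X-Vault-Token header.
    return urllib.request.Request(url, headers={"X-Vault-Token": token}, method="GET")


def extract_kv2_secret(response_body: str) -> dict:
    """Pull the key/value pairs out of a KV version 2 read response."""
    payload = json.loads(response_body)
    # KV v2 nests the secret under data.data; data.metadata holds version info.
    return payload["data"]["data"]


def read_secret(base_url: str, secret_path: str, token: str, timeout: float = 5.0) -> dict:
    """Send the request and return the secret (needs a reachable Vault server)."""
    request = build_read_request(base_url, secret_path, token)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return extract_kv2_secret(response.read().decode("utf-8"))
```

Against a real cluster, a call would look like `read_secret("https://example.com", "kv/data/stage/test", token)`, with the token supplied by whatever auth method the application uses.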

High Availability

Vault can run in a high availability (HA) mode to protect against outages by running multiple Vault servers. Vault is typically bound by the IO limits of the storage backend rather than the compute requirements. Certain storage backends, such as Consul, provide additional coordination functions that enable Vault to run in an HA configuration while others provide a more robust backup and restoration process.

When running in HA mode, Vault servers have two additional states: active and standby. Within a Vault cluster, only a single instance is active and handles all requests (reads and writes); all standby instances redirect requests to the active instance.
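The active/standby behaviour can be pictured with a toy model. In Vault, the node that holds a lock in the storage backend is the active one; the sketch below imitates only that idea and is in no way Vault's real implementation. The class, its method names and the node names are all made up for illustration.

```python
from typing import Dict, Optional, Sequence


class ToyHaCluster:
    """Toy model of active/standby behaviour; not Vault's real implementation."""

    def __init__(self, node_names: Sequence[str]) -> None:
        self.healthy: Dict[str, bool] = {name: True for name in node_names}
        # Whoever holds the HA lock is the active node.
        self.lock_holder: Optional[str] = None
        self.elect()

    def elect(self) -> None:
        """Hand the lock to the first healthy node unless a healthy node holds it."""
        if self.lock_holder is not None and self.healthy[self.lock_holder]:
            return
        self.lock_holder = next((n for n, ok in self.healthy.items() if ok), None)

    def fail(self, name: str) -> None:
        """Mark a node as down; a standby takes over if the failed node was active."""
        self.healthy[name] = False
        self.elect()

    def handle(self, receiving_node: str, request: str) -> str:
        """Every request ends up on the active node, whichever node receives it."""
        if self.lock_holder is None:
            raise RuntimeError("no active node available")
        if receiving_node == self.lock_holder:
            return f"{self.lock_holder} served {request}"
        return f"{receiving_node} redirected {request} to {self.lock_holder}"
```

With two nodes, a request sent to the standby is redirected to the active node, and once the active node is marked as failed the remaining node starts serving requests itself.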

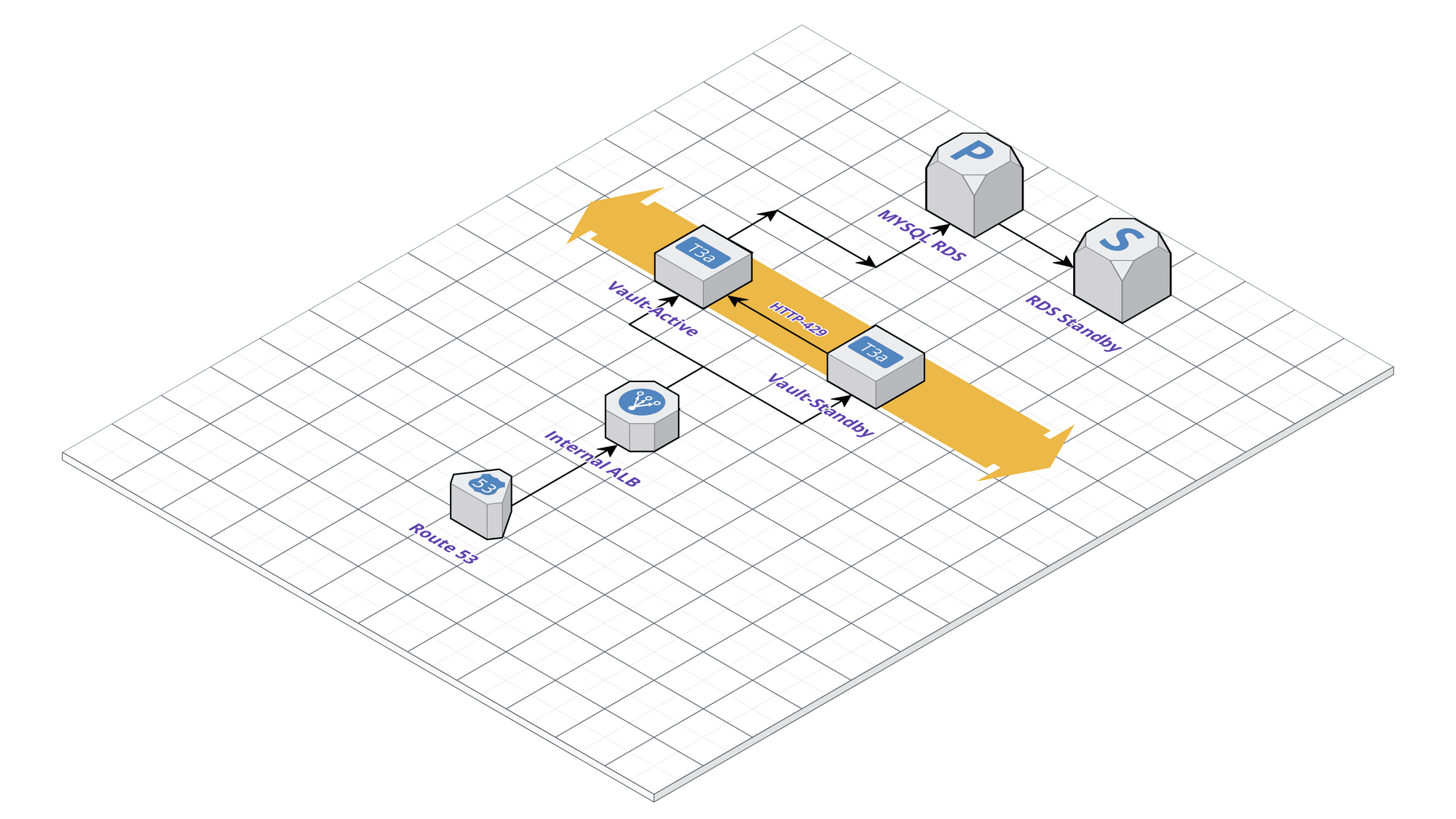

Architecture

Cluster Design Considerations

The primary design goal is to make Vault highly available (HA) and minimize downtime.

We started with a small proof of concept, running Vault in development mode on a local machine, in order to understand how Vault works.

Running Vault in HA mode requires a storage backend that supports it, and HashiCorp recommends running a multi-node Consul cluster. But we did not want to bear the pain of managing a Consul cluster; we wanted a managed storage backend.

We decided to use a MySQL database (on RDS) as the backend because we wanted a generic solution that is available in any cloud, enabling a seamless migration to another cloud in the future.

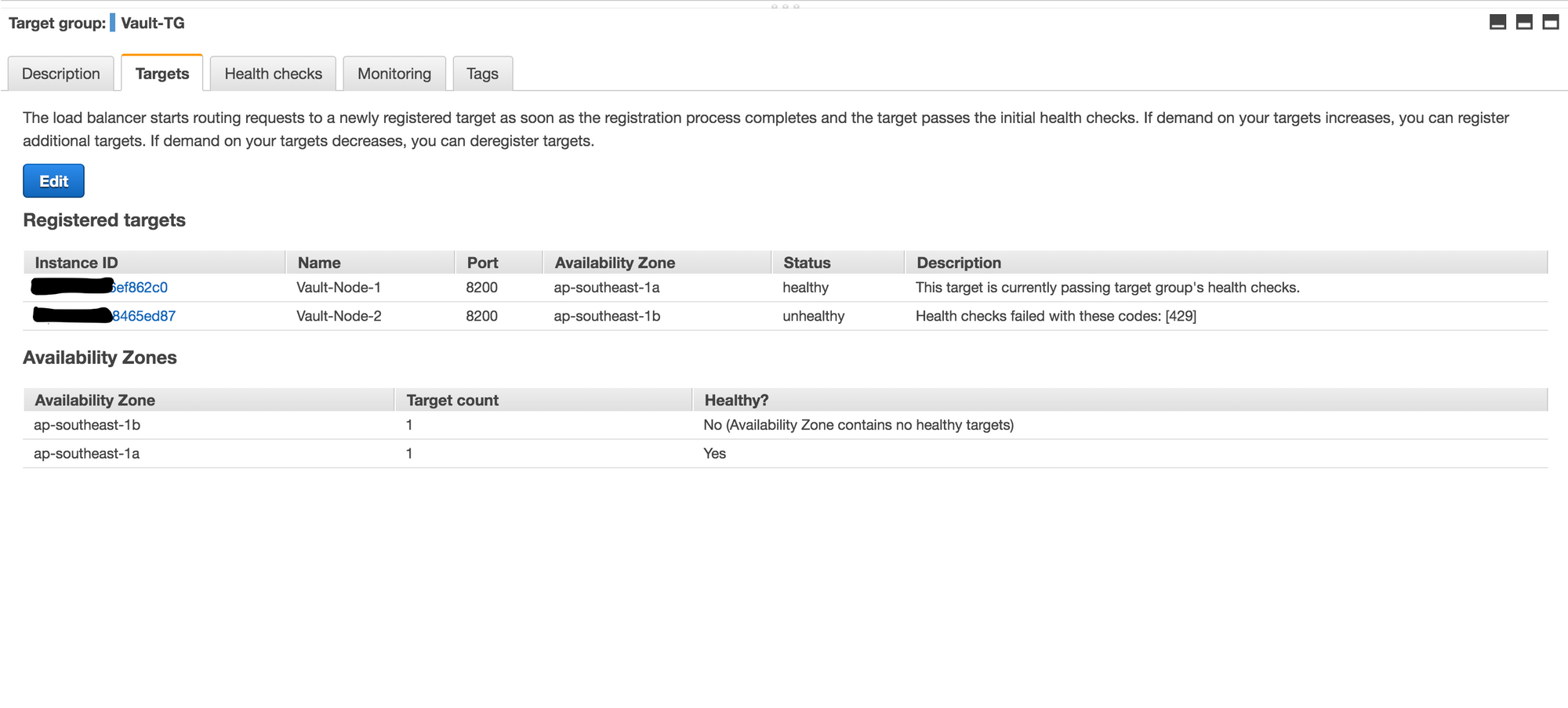

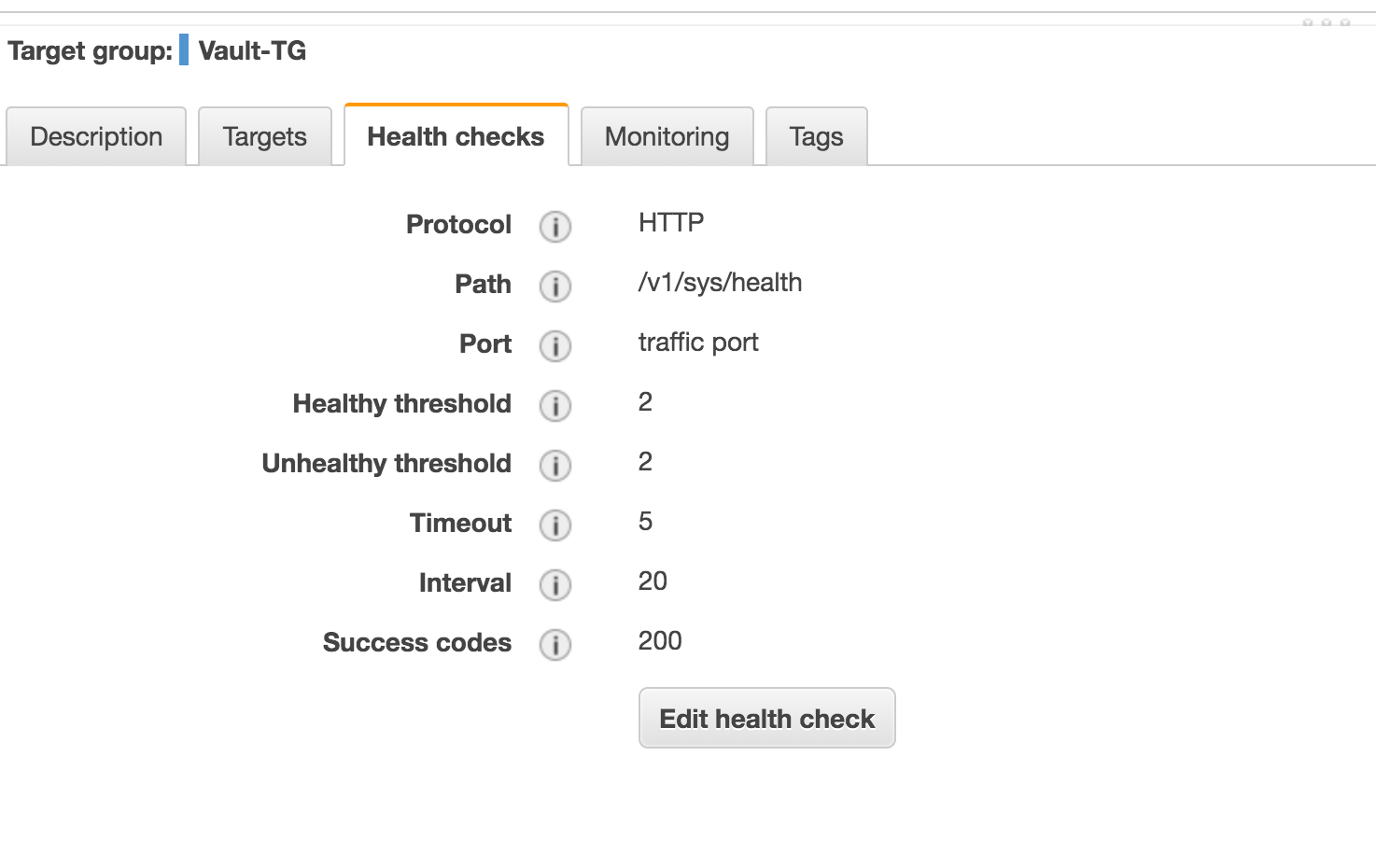

For the final setup, we decided to use EC2 instances behind an internal ALB. With this setup, the ALB sees the active node as up and the standby node as down (as shown in the diagram, an unsealed standby answers the health check with HTTP status 429), which fits perfectly, since requests will be routed only to the active Vault instance. The HA in Vault, in our case, is intended to offer high availability and not to increase capacity. By this design, one Vault instance serves all requests while the rest are hot standbys. If the active node fails, one of the standby nodes automatically becomes active.
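The routing decision described above can be sketched in a few lines. The status codes are the defaults of Vault's `/v1/sys/health` endpoint for the states relevant to an open-source HA cluster. That the target group's health check points at this path and accepts only HTTP 200 is an assumption made for this sketch, since the exact target group settings are not reproduced in the text. The function and node names are hypothetical.

```python
# Default /v1/sys/health status codes for the states relevant here.
HEALTH_STATES = {
    200: "initialized, unsealed, active",
    429: "unsealed, standby",
    501: "not initialized",
    503: "sealed",
}


def describe_node(status_code: int) -> str:
    """Translate a health-check status code into a readable node state."""
    return HEALTH_STATES.get(status_code, "unknown")


def alb_marks_healthy(status_code: int, success_codes: frozenset = frozenset({200})) -> bool:
    """Mimic a health check whose matcher accepts only `success_codes` (assumed: 200)."""
    return status_code in success_codes


def routable_nodes(statuses: dict) -> list:
    """Return the nodes the load balancer would send traffic to."""
    return [node for node, code in statuses.items() if alb_marks_healthy(code)]
```

For example, `routable_nodes({"vault-1": 200, "vault-2": 429})` keeps only `vault-1`, which is exactly the behaviour we want: the standby stays registered but receives no traffic until it becomes active.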

Implementation

Primary Node:

    ui = true

    listener "tcp" {
      address     = "0.0.0.0:8200"
      tls_disable = "true"
    }

    storage "mysql" {
      ha_enabled    = "true"
      address       = "vault-XXXXXXXXX.ap-southeast-1.rds.amazonaws.com:3306"
      username      = "user"
      password      = "password"
      database      = "vault"
      redirect_addr = "http://vault.example.com"
      api_addr      = "http://vault.example.com"
      cluster_addr  = "http://vault-internal.*com.:8201"
    }

Secondary Node:

    ui = true

    listener "tcp" {
      address     = "0.0.0.0:8200"
      tls_disable = "true"
    }

    storage "mysql" {
      ha_enabled    = "true"
      address       = "vault-XXXXXXXXX.ap-southeast-1.rds.amazonaws.com:3306"
      username      = "user"
      password      = "password"
      database      = "vault"
      redirect_addr = "http://vault.example.com"
      api_addr      = "http://vault.example.com"
      cluster_addr  = "http://vault-internal.*com.:8201"
    }

Target Group:

Health Check:

Scalability

As we onboard more teams and applications to Vault, usage will go up, so we have to figure out how to scale Vault accordingly.

We learned that horizontal scaling is not really a solution for Vault: adding nodes to the cluster only adds more standby nodes. We also cannot use standby nodes to serve reads, as that feature is only available in the Vault Enterprise edition.

Since Vault is typically bound by the IO limits of the storage backend rather than the compute requirements, we need to think through how to scale up the storage backend because every request, for the most part, will end up hitting the storage backend.

As we are using MySQL on RDS in Multi-AZ mode, the availability of the backend is taken care of, and the database instance can be scaled up when needed. Additionally, we can scale up the Vault nodes with a higher open-files limit and more memory so they can handle more requests.
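Every client connection consumes a file descriptor on the Vault node, which is why the open-files limit matters here. The following is a minimal sketch (Unix only) for inspecting that limit from Python. The `reserve` cushion and the function names are arbitrary choices for the example, not values from our setup.

```python
import resource


def open_file_limits() -> tuple:
    """Return the (soft, hard) limit on open file descriptors for this process."""
    return resource.getrlimit(resource.RLIMIT_NOFILE)


def has_fd_headroom(soft_limit: int, expected_connections: int, reserve: int = 256) -> bool:
    """Check that the soft limit covers the expected connections plus a reserve.

    `reserve` is an arbitrary cushion for log files, database connections
    and similar descriptors that are not client connections.
    """
    if soft_limit == resource.RLIM_INFINITY:
        return True
    return soft_limit >= expected_connections + reserve
```

A common default soft limit of 1024 would fail this check for 20K expected connections, while a limit of 65536 would pass.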

Right-sizing

At Halodoc, we have 50+ microservices running in production. The microservices communicate with each other in an event-driven fashion using AWS SNS and Lambda. As a result, we have 30+ Lambda functions that hit the Vault cluster concurrently to fetch credentials, at 10K requests per minute (RPM).

Since Vault cannot be scaled horizontally, we had to right-size the Vault nodes so that they support almost 20K concurrent transactions (at least twice the current workload).

We were also curious to find out how many concurrent transactions each instance type can support. So, we decided to analyze the capability of a single Vault node by load testing the Vault cluster.

We started load testing with a t2.small instance; the findings are below.

t2.small:

We started with 1K requests at a concurrency of 1K, and the run completed without any issue.

    root@Jmeter-test-server:/home/ubuntu# ab -n1000 -c1000 -H "X-Vault-Token: ***********" https://example.com/v1/kv/data/stage/test
    This is ApacheBench, Version 2.3 <$Revision: 1807734 $>
    Copyright 1996 Adam Twiss, Zeus Technology Ltd, http://www.zeustech.net/
    Licensed to The Apache Software Foundation, http://www.apache.org/

    Concurrency Level:      1000
    Time taken for tests:   2.421 seconds
    Complete requests:      1000
    Failed requests:        0
    Total transferred:      848000 bytes
    HTML transferred:       695000 bytes
    Requests per second:    413.08 [#/sec] (mean)
    Time per request:       2420.823 [ms] (mean)
    Time per request:       2.421 [ms] (mean, across all concurrent requests)
    Transfer rate:          342.08 [Kbytes/sec] received

    Connection Times (ms)
                  min  mean[+/-sd] median   max
    Connect:        5   810  140.6    817   936
    Processing:   106   753  431.3    631  1504
    Waiting:      106   753  431.3    630  1504
    Total:        118  1563  524.4   1470  2394

We then incrementally increased the number of requests along with the concurrency level to see how performance held up.
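Stepping through load levels by hand gets tedious, so here is a small sketch of how the ApacheBench invocations could be generated. It uses only the `-n`, `-c` and `-H` flags seen in the commands in this post. The function names are hypothetical, and the list of levels is up to the person running the test.

```python
def build_ab_command(url: str, token: str, requests: int, concurrency: int) -> list:
    """Return the argv for one ApacheBench run (-n total requests, -c concurrency)."""
    if concurrency > requests:
        # ab itself rejects a concurrency level above the total request count.
        raise ValueError("concurrency cannot exceed the number of requests")
    return ["ab", f"-n{requests}", f"-c{concurrency}", "-H", f"X-Vault-Token: {token}", url]


def load_steps(url: str, token: str, levels: list) -> list:
    """Build one command per level, with concurrency equal to the request count."""
    return [build_ab_command(url, token, level, level) for level in levels]
```

Each command list can be handed to `subprocess.run(cmd, capture_output=True, text=True)` on a machine that has `ab` installed, and the captured output kept for comparison between levels.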

We started getting failures the moment we reached 4K requests with a concurrency level of 4K.

    root@Jmeter-test-server:/home/ubuntu# ab -n4000 -c4000 -H "X-Vault-Token: ******" https://example.com/v1/kv/data/stage/test
    This is ApacheBench, Version 2.3 <$Revision: 1807734 $>
    Copyright 1996 Adam Twiss, Zeus Technology Ltd, http://www.zeustech.net/
    Licensed to The Apache Software Foundation, http://www.apache.org/

    Concurrency Level:      4000
    Time taken for tests:   13.670 seconds
    Complete requests:      4000
    Failed requests:        116
       (Connect: 0, Receive: 0, Length: 116, Exceptions: 0)
    Non-2xx responses:      116
    Total transferred:      3328780 bytes
    HTML transferred:       2716548 bytes
    Requests per second:    292.62 [#/sec] (mean)
    Time per request:       13669.687 [ms] (mean)
    Time per request:       3.417 [ms] (mean, across all concurrent requests)
    Transfer rate:          237.81 [Kbytes/sec] received

    Connection Times (ms)
                  min  mean[+/-sd] median    max
    Connect:        5  2946  531.1   2991   3425
    Processing:    65  3914 2837.3   3425  10424
    Waiting:       65  3914 2837.3   3425  10424
    Total:         86  6860 3098.3   6384  13575

    Percentage of the requests served within a certain time (ms)
      50%   6384
      66%   7455
      75%  10464
      80%  10779
      90%  11274
      95%  11571
      98%  13492
      99%  13541
     100%  13575 (longest request)

Observation:

From the above exercise, we observed that the t2.small instance already returns failures at a concurrency of 4K, so it is not sufficient to handle 20K concurrent requests.
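To compare runs without reading every report by eye, the summary lines of an ApacheBench report can be extracted with a few regular expressions. This is a minimal sketch that assumes the report layout shown in the outputs above; the function names are hypothetical, and the sample values are copied from the 4K run.

```python
import re

# A few summary lines from the 4K run above, used as sample input.
SAMPLE_REPORT = "\n".join([
    "Complete requests: 4000",
    "Failed requests: 116",
    "Non-2xx responses: 116",
    "Requests per second: 292.62 [#/sec] (mean)",
])

PATTERNS = {
    "complete": r"^Complete requests:\s+(\d+)",
    "failed": r"^Failed requests:\s+(\d+)",
    "non_2xx": r"^Non-2xx responses:\s+(\d+)",
    "rps": r"^Requests per second:\s+([\d.]+)",
}


def parse_ab_summary(report: str) -> dict:
    """Extract selected summary fields; fields missing from the report are skipped."""
    summary = {}
    for name, pattern in PATTERNS.items():
        match = re.search(pattern, report, flags=re.MULTILINE)
        if match:
            value = match.group(1)
            summary[name] = float(value) if "." in value else int(value)
    return summary


def failure_rate(summary: dict) -> float:
    """Share of completed requests that ab counted as failed."""
    return summary.get("failed", 0) / summary["complete"]
```

For the 4K run, `failure_rate(parse_ab_summary(SAMPLE_REPORT))` gives 116 / 4000, that is 2.9% of requests failing.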

t2.medium:

We carried out the same load test on a t2.medium instance, with some tuning of the system configuration, and found the following.

    root@Jmeter-test-server:/home/ubuntu# ab -n20000 -c20000 -H "X-Vault-Token: *******" https://example.com/v1/kv/data/prod/test
    This is ApacheBench, Version 2.3 <$Revision: 1807734 $>
    Copyright 1996 Adam Twiss, Zeus Technology Ltd, http://www.zeustech.net/
    Licensed to The Apache Software Foundation, http://www.apache.org/

    Concurrency Level:      20000
    Time taken for tests:   57.060 seconds
    Complete requests:      20000
    Failed requests:        0
       (Connect: 0, Receive: 0, Length: 6714, Exceptions: 0)
    Total transferred:      12941698 bytes
    HTML transferred:       9868420 bytes
    Requests per second:    350.51 [#/sec] (mean)
    Time per request:       57059.741 [ms] (mean)
    Time per request:       2.853 [ms] (mean, across all concurrent requests)
    Transfer rate:          221.49 [Kbytes/sec] received

    Connection Times (ms)
                    min   mean[+/-sd] median    max
    Connect:      13423  23495 2326.6  24425  25549
    Processing:    1395  10077 4504.0  10911  30811
    Waiting:       1395  10074 4503.1  10911  30811
    Total:        19596  33572 4651.4  34200  54335

    Percentage of the requests served within a certain time (ms)
      50%  34200
      66%  36299
      75%  36776
      80%  37437
      90%  38912
      95%  39735
      98%  41028
      99%  48842
     100%  54335 (longest request)

Observation:

We observed that the t2.medium instance is capable of supporting 20K requests at a concurrency of 20K with acceptable response times. We also observed that CPU, memory and system load stayed well within limits.
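When reading these reports, it helps to know how ApacheBench derives its headline figures from the request count, the concurrency level and the total test time. The sketch below restates that arithmetic; the function name is hypothetical, and the duration is taken from the more precise "Time per request" line of the t2.medium run, because the "Time taken for tests" line is rounded.

```python
def ab_derived_metrics(complete_requests: int, concurrency: int, seconds: float) -> dict:
    """Recompute ab's throughput and per-request figures from the raw totals."""
    return {
        # Requests per second: completed requests divided by wall-clock time.
        "requests_per_second": complete_requests / seconds,
        # Time per request (mean): scaled by concurrency, so it equals the whole
        # test duration when concurrency matches the request count.
        "ms_per_request": concurrency * seconds * 1000 / complete_requests,
        # Time per request (mean, across all concurrent requests).
        "ms_per_request_across_all": seconds * 1000 / complete_requests,
    }


# Totals from the t2.medium run: 20000 requests, concurrency 20000, 57.059741 s.
t2_medium = ab_derived_metrics(20000, 20000, 57.059741)
```

The result reproduces the reported 350.51 requests per second, 57059.741 ms and 2.853 ms. Because every run in this post uses a concurrency equal to the request count, the first "Time per request" figure is simply the total test duration, and the percentile table is the better guide to what individual requests experienced.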

Conclusion

With this exercise, we identified that HashiCorp Vault is the best fit for our use cases and arrived at an HA setup with the right sizing (for our usage) by performing a proof of concept and load testing. We are excited to have taken a step in the right direction with this, and we hope to make more improvements in the future.
