Kafka Integration Test Automation

Apache Kafka is a distributed event streaming platform used by organisations to build real-time data pipelines and streaming applications. Using Kafka for asynchronous communication between systems and applications is a common requirement in many organisations. In this blog post, we will explore why and how to automate Kafka integration testing using Dockerized Kafka and WireMock.

Before we get into how to do Kafka integration testing, let’s understand why we need it.

Why Kafka Integration Testing?

Integration testing of Kafka is necessary to ensure that the Kafka system and its components work seamlessly with other systems and applications in the overall software ecosystem. Here are some reasons why integration testing of Kafka is needed:

- Data consistency and correctness: Integration testing helps verify that the data produced by Kafka producers and consumed by Kafka consumers is consistent, correct, and meets the expected requirements. It ensures that data messages are properly published, delivered, and consumed without loss or corruption.

- End-to-end flow validation: Kafka integration testing allows you to validate the end-to-end flow of data within the system. It ensures that data messages are successfully transmitted from producers to Kafka topics, consumed by consumers, and processed or stored in downstream systems or applications as intended.

- Compatibility and interoperability: Integration testing helps identify any compatibility issues or conflicts between Kafka and other components of the software ecosystem. It ensures that Kafka integrates smoothly with other systems, such as databases, analytics tools, or messaging systems, and that they can exchange data effectively.

- Error handling and fault tolerance: Integration testing allows you to assess how the application handles various error scenarios, such as network failures, message duplication, or consumer failures. It helps ensure that the application implements appropriate error-handling mechanisms, such as retries, dead-letter queues, and other fault-tolerance measures, to maintain system reliability and data integrity.

- Performance and scalability: Integration testing helps evaluate the performance and scalability of Kafka under real-world conditions. It allows you to simulate and measure the behaviour of Kafka in scenarios involving high data loads, concurrent producers and consumers, and varying network conditions to ensure that the system can handle the expected workload efficiently.

Why did we implement Kafka integration testing at Halodoc?

- Whenever our end-to-end automation broke, it took a lot of time to find the root cause. Working out whether the issue was on the publisher side or the consumer side was challenging.

- Automating negative scenarios in end-to-end tests was very difficult. For example, one test case is that ServiceA should not publish a message when its dependency, ServiceB, returns an error.

- In some scenarios, the publisher was pushing duplicate messages for the same event. To catch these, we check that exactly one message is received for each event.

- Automating all the different consumer scenarios resulted in bulky test cases that were more flaky. For example, when ServiceA produces a message, ServiceB, ServiceC, and ServiceD all consume it. Validating all of these consumer scenarios in one test case was tedious and flaky.

Automating Kafka Integration Testing

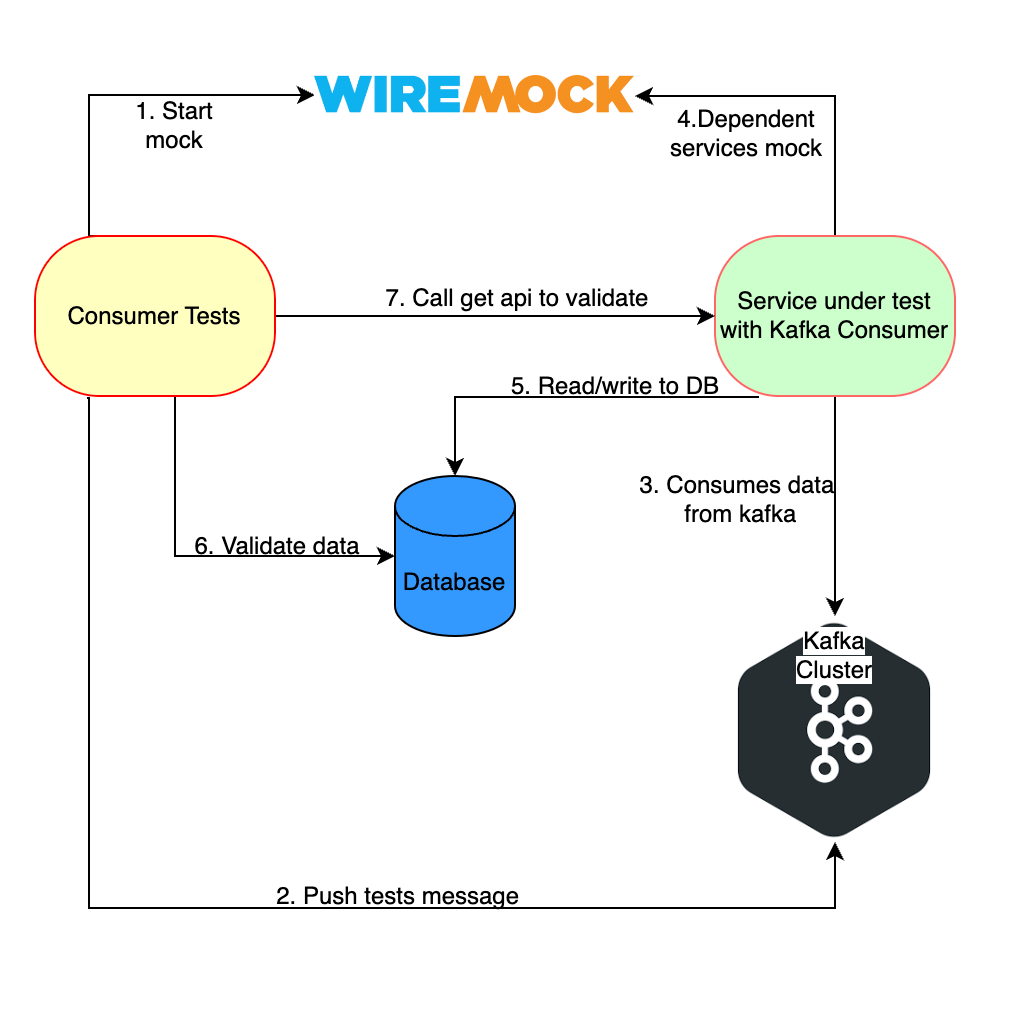

Integration testing of Kafka involves testing the interaction between Kafka and other systems, covering both message production and message consumption and processing. To automate this, we can use Docker to spin up a Kafka broker, a ZooKeeper instance, and a WireMock server that simulates any third-party services the application depends on, such as an authorisation server or a payment gateway.
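The setup above gives each test three endpoints to talk to: the Kafka broker, the WireMock server, and the application under test. A minimal sketch of keeping them in one place is shown below. It assumes hypothetical environment variable names (`KAFKA_BOOTSTRAP_SERVERS`, `WIREMOCK_BASE_URL`, `APP_BASE_URL`) and local default ports, none of which come from our actual setup.

```java
import java.util.function.Function;

// Hypothetical holder for the endpoints the tests need.
// Variable names and default ports are assumptions, not a real project's configuration.
record TestEnvironment(String kafkaBootstrapServers, String wireMockBaseUrl, String appBaseUrl) {

    // Reads each endpoint through the given lookup (e.g. System::getenv),
    // falling back to local Docker defaults when a value is missing or blank.
    static TestEnvironment from(Function<String, String> lookup) {
        return new TestEnvironment(
            valueOr(lookup, "KAFKA_BOOTSTRAP_SERVERS", "localhost:9092"),
            valueOr(lookup, "WIREMOCK_BASE_URL", "http://localhost:8080"),
            valueOr(lookup, "APP_BASE_URL", "http://localhost:8081"));
    }

    private static String valueOr(Function<String, String> lookup, String key, String fallback) {
        String value = lookup.apply(key);
        return (value == null || value.isBlank()) ? fallback : value;
    }
}
```

Calling `TestEnvironment.from(System::getenv)` lets the same tests run against a local Docker stack or, with the variables overridden, against hosts that a CI build agent can reach.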

Here are the basic steps to perform Kafka integration testing using Docker:

- Install Docker: First, you need to install Docker on your machine. You can download and install Docker from the official Docker website.

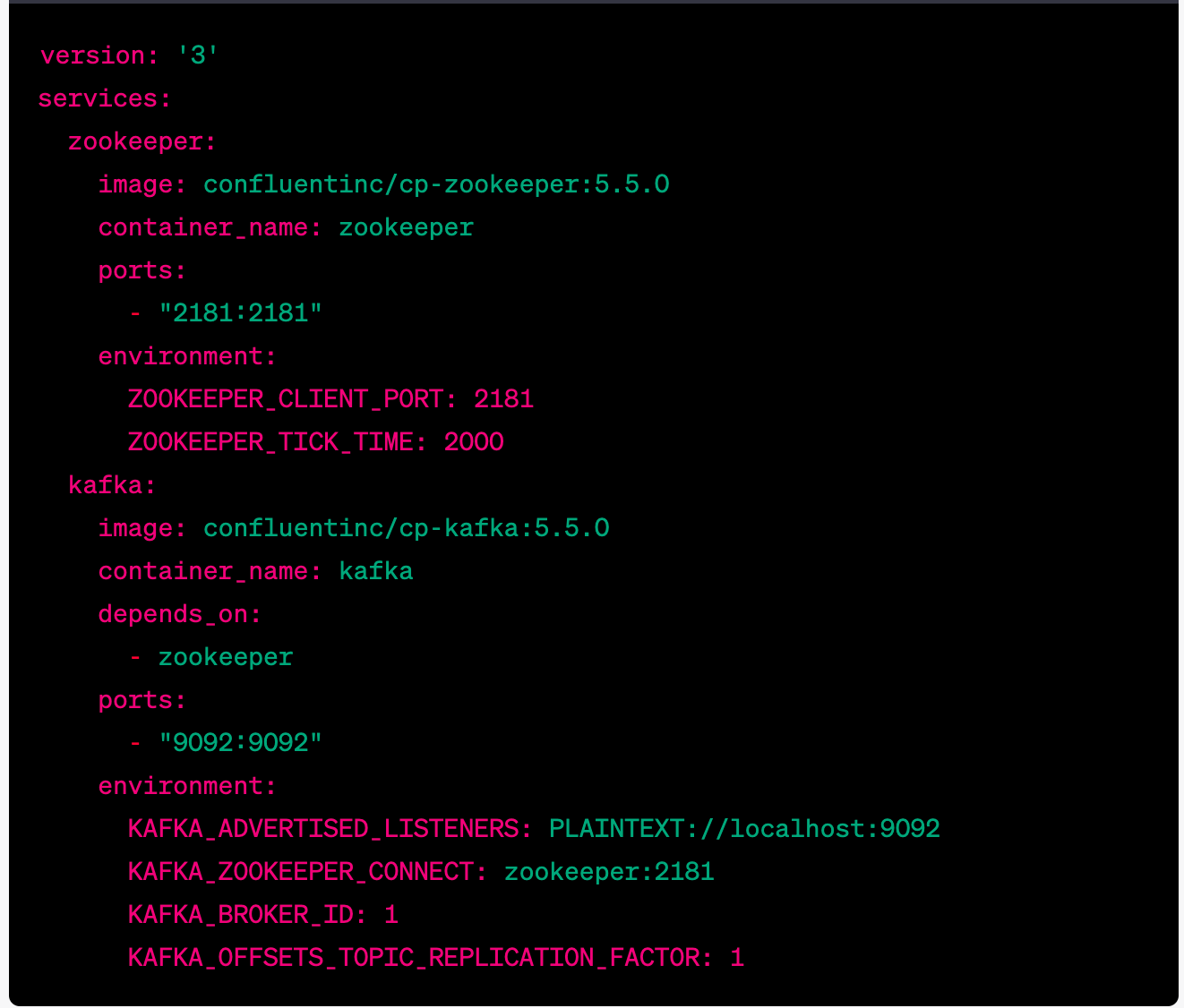

- Set up a Dockerized Kafka cluster: You can set up a Kafka cluster using Docker Compose. Docker Compose is a tool for defining and running multi-container Docker applications. You can define the Kafka cluster in a YAML file and run it with a single command. Here is an example YAML file for a single-node Kafka cluster:

You can save this YAML file as docker-compose.yml and run the following command to start the Kafka cluster:

This will start a Kafka cluster with one broker and one ZooKeeper instance.
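Starting the containers does not mean the broker is already accepting connections, so tests that begin immediately can fail intermittently. Below is a minimal JDK-only sketch that waits until the broker's port accepts TCP connections. It assumes the broker is published on a host port such as 9092, which is an assumption about the Compose file. `PortWaiter` is a hypothetical name. An open port only shows that the listener is up, so the very first produce or consume may still need a retry.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;
import java.time.Duration;

// Hypothetical helper: blocks until host:port accepts a TCP connection or the timeout passes.
final class PortWaiter {

    static boolean waitForPort(String host, int port, Duration timeout) {
        long deadline = System.nanoTime() + timeout.toNanos();
        while (System.nanoTime() < deadline) {
            try (Socket socket = new Socket()) {
                socket.connect(new InetSocketAddress(host, port), 500); // 500 ms per attempt
                return true; // something is listening on the port
            } catch (IOException notReadyYet) {
                try {
                    Thread.sleep(250); // brief pause before the next attempt
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                    return false;
                }
            }
        }
        return false; // deadline reached without a successful connection
    }
}
```

Calling `PortWaiter.waitForPort("localhost", 9092, Duration.ofSeconds(60))` from a suite-level setup hook, and failing fast when it returns false, turns an unreachable broker into one clear error instead of many timed-out tests.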

- Mocking dependent servers: We can use WireMock to mock the responses of any server the application depends on.

WireMock is an open-source stubbing and mocking tool for HTTP-based services. It allows developers to simulate and control the behaviour of external APIs or services during testing or development. WireMock provides a standalone server that can be configured to act as a mock server, mimicking the responses of the actual services.

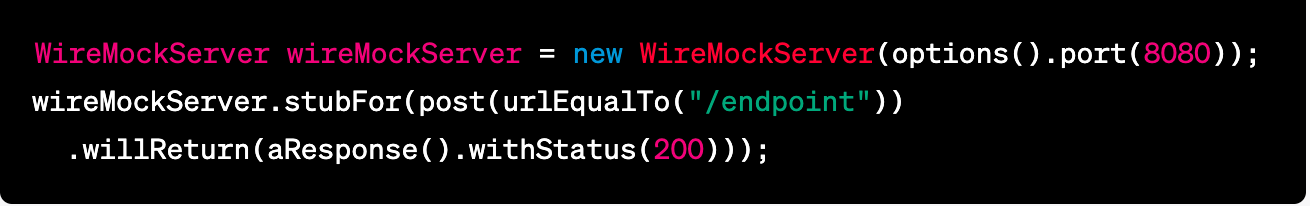

We can run a WireMock server to simulate each HTTP endpoint the application calls and define the server's expected behaviour using the WireMock API. For example, we can define a stub that expects a POST request to an endpoint and returns a 200 OK response:

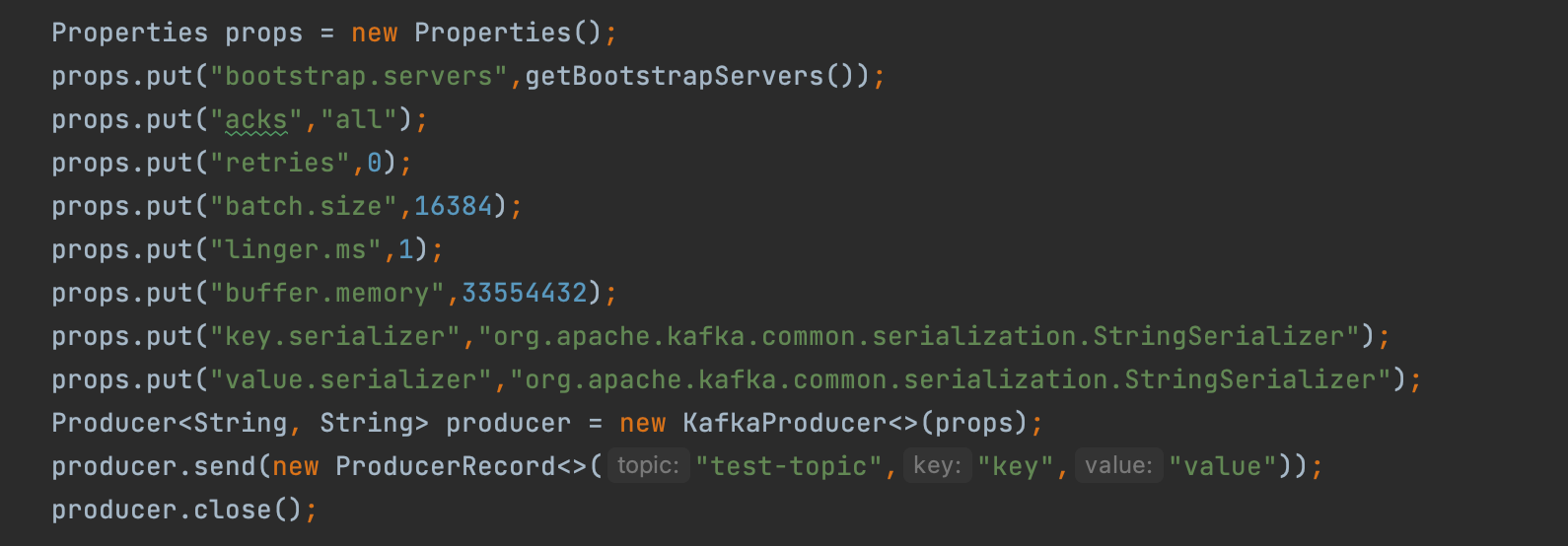
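A POST-returns-200 stub like the one described above can also be registered at runtime over HTTP. WireMock's admin API accepts stub mappings as JSON via `POST /__admin/mappings`. The sketch below uses only the JDK `HttpClient`. The class name `WireMockAdmin` and the endpoint path and response body used in the usage note are hypothetical placeholders. Projects that already depend on WireMock's Java client can express the same stub with `stubFor(post(urlEqualTo(...)).willReturn(aResponse().withStatus(200)))`.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;

// Hypothetical helper that talks to WireMock's admin API with the JDK HTTP client.
final class WireMockAdmin {

    // Builds a stub mapping: any POST to urlPath gets 200 OK with the given JSON body.
    // responseJson must already be valid JSON; it is embedded as-is under "jsonBody".
    static String postStub(String urlPath, String responseJson) {
        return "{"
            + "\"request\":{\"method\":\"POST\",\"urlPath\":\"" + urlPath + "\"},"
            + "\"response\":{\"status\":200,"
            + "\"headers\":{\"Content-Type\":\"application/json\"},"
            + "\"jsonBody\":" + responseJson + "}"
            + "}";
    }

    // Sends the mapping to /__admin/mappings and returns the HTTP status code.
    static int register(HttpClient client, String wireMockBaseUrl, String mappingJson)
            throws IOException, InterruptedException {
        HttpRequest request = HttpRequest.newBuilder(URI.create(wireMockBaseUrl + "/__admin/mappings"))
            .header("Content-Type", "application/json")
            .POST(HttpRequest.BodyPublishers.ofString(mappingJson))
            .build();
        return client.send(request, HttpResponse.BodyHandlers.discarding()).statusCode();
    }
}
```

For example, `WireMockAdmin.register(HttpClient.newHttpClient(), "http://localhost:8080", WireMockAdmin.postStub("/payments/charge", "{\"status\":\"SUCCESS\"}"))` would stub a hypothetical payment endpoint. A 2xx status means WireMock accepted the mapping.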

Write consumer flow integration tests: Once our Kafka, ZooKeeper, and WireMock instances are running, we can start a test by creating a Kafka producer and sending messages to the Kafka topic:

1. Create a test producer and push messages to the topic consumed by the service under test.

2. Wait for some time so that the service under test can process the messages.

3. Validate the application's behaviour, for example by checking the data in the database or verifying that a third-party API was called.

Consumer flow tests

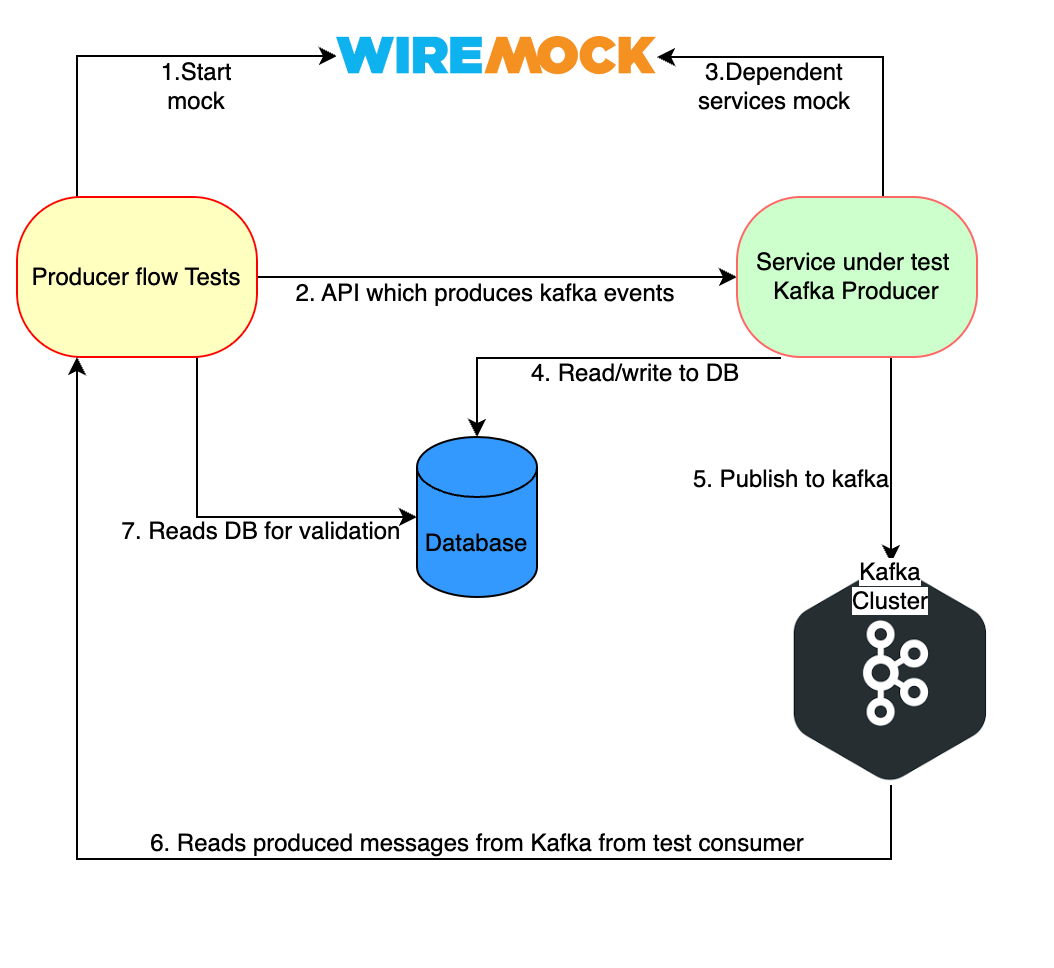
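Step 2 waits "for some time", and a fixed sleep tends to be either too short, which makes the test flaky, or too long, which slows the suite. One alternative, sketched here with only the JDK and a hypothetical `Await` helper, is to poll the check from step 3 until it passes or a timeout expires. The Awaitility library offers the same pattern if adding a dependency is acceptable.

```java
import java.time.Duration;
import java.util.function.BooleanSupplier;

// Hypothetical polling helper used in place of a fixed sleep.
final class Await {

    // Re-evaluates the condition every pollInterval until it holds or the timeout elapses.
    // Returns true as soon as the condition holds, false if the timeout is reached first.
    static boolean until(BooleanSupplier condition, Duration timeout, Duration pollInterval) {
        long deadline = System.nanoTime() + timeout.toNanos();
        while (true) {
            if (condition.getAsBoolean()) {
                return true;
            }
            if (System.nanoTime() >= deadline) {
                return false;
            }
            try {
                Thread.sleep(pollInterval.toMillis());
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                return false;
            }
        }
    }
}
```

A consumer-flow test might then call `Await.until(() -> orderExistsInDb(eventId), Duration.ofSeconds(30), Duration.ofMillis(500))`, where `orderExistsInDb` is a hypothetical query against the service's database. The test finishes as soon as the data appears rather than always paying the worst-case wait.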

Write producer flow integration tests: Once our Kafka, ZooKeeper, and WireMock instances are running, we can start testing the producer flows of the application under test:

- Create a test consumer that listens to the topic the application produces data to.

- Invoke the API that produces data to the topic.

- Validate the message received by the test consumer.

Producer flow tests
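For the duplicate-message problem mentioned earlier, the validation step can check that each expected event produced exactly one message. This sketch assumes the test consumer has already collected an event id from every record it polled. Reading that id from the record key, for example, is an assumption about the message format. The sketch ignores unrelated traffic on the topic. `DeliveryCheck` is a hypothetical name.

```java
import java.util.Collection;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical helper for producer-flow assertions.
final class DeliveryCheck {

    // Returns each expected event id whose message count is not exactly one:
    // 0 means the message is missing, more than 1 means it was duplicated.
    // Ids that were not expected (other traffic on the topic) are ignored.
    static Map<String, Integer> notExactlyOnce(Collection<String> expectedEventIds,
                                               Collection<String> consumedEventIds) {
        Map<String, Integer> counts = new TreeMap<>();
        for (String id : expectedEventIds) {
            counts.put(id, 0);
        }
        for (String id : consumedEventIds) {
            counts.computeIfPresent(id, (key, count) -> count + 1);
        }
        counts.values().removeIf(count -> count == 1);
        return counts; // empty map means every expected event arrived exactly once
    }
}
```

In a JUnit test, `assertTrue(problems.isEmpty(), problems.toString())` would then report exactly which events were missing or duplicated, instead of a bare pass/fail.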

Run integration tests: To run the integration tests, start the Kafka cluster using Docker Compose and then run the tests with a testing framework such as JUnit or TestNG.

Steps to Avoid Common Errors

- Make sure the Kafka port is open and reachable on the host machine where the Kafka Docker container is running.

- Make sure the application and Kafka hosts are reachable from the machine where the tests run.

- Make sure all endpoints of dependent services are correctly stubbed in the mock services.

- Reset the mocks after each test so that stubs and recorded requests from one test do not affect the next.
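For resetting mocks, WireMock's admin API exposes `POST /__admin/reset`, which resets the stub mappings and clears the request journal. Below is a minimal JDK-only sketch that a JUnit `@AfterEach` or TestNG `@AfterMethod` hook could call. The class name `WireMockReset` is hypothetical.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;

// Hypothetical teardown helper for WireMock.
final class WireMockReset {

    // Builds the admin call that resets stub mappings and the request journal.
    static HttpRequest resetRequest(String wireMockBaseUrl) {
        return HttpRequest.newBuilder(URI.create(wireMockBaseUrl + "/__admin/reset"))
            .POST(HttpRequest.BodyPublishers.noBody())
            .build();
    }

    // Intended for an after-each-test hook; fails loudly if WireMock rejects the reset.
    static void reset(HttpClient client, String wireMockBaseUrl) throws IOException, InterruptedException {
        int status = client.send(resetRequest(wireMockBaseUrl), HttpResponse.BodyHandlers.discarding())
            .statusCode();
        if (status / 100 != 2) {
            throw new IllegalStateException("WireMock reset failed with HTTP " + status);
        }
    }
}
```

Failing the teardown on a non-2xx status surfaces a broken mock server immediately, rather than letting stale stubs silently change the outcome of the next test.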

Impact of Integration Testing

- Enhanced data integrity: By validating the data flow within the system, integration testing helped us maintain data integrity and consistency, ensuring that the data being processed or stored is accurate and reliable.

- Improved fault tolerance and error handling: Automating Kafka integration tests let us test fault-tolerance mechanisms such as retries, error queues, and failovers. This helped us improve the system's resilience to failures and its overall error handling.

- Increased confidence in deployments: Kafka integration automation gives us assurance that Kafka and its integrations work correctly in the target environment. Knowing that the integration points have been validated boosts confidence that the system will function as expected.

- Reduced release time: Since we integrated these tests into our build pipeline, we can run them early in the development life cycle. This has significantly reduced the time spent debugging and fixing issues later in the process.

Conclusion

In this blog post, we explored how to automate Kafka integration testing using Dockerized Kafka and WireMock. By using Docker to spin up Kafka and ZooKeeper instances and WireMock to simulate external systems, we can easily test the interaction between Kafka and other systems. This approach can shorten release time and reduce the risk of bugs and errors in production.

Here are a few reference links for a better understanding of the topics above:

https://kafka.apache.org/documentation/#api

https://docs.docker.com/get-started/

https://wiremock.org/docs/

Join us

Scalability, reliability and maintainability are the three pillars that govern what we build at Halodoc Tech. We are actively looking for engineers at all levels, and if solving hard problems with challenging requirements is your forte, please reach out to us with your resumé at careers.india@halodoc.com.

About Halodoc

Halodoc is the number 1 all-around healthcare application in Indonesia. Our mission is to simplify and bring quality healthcare across Indonesia, from Sabang to Merauke. We connect 20,000+ doctors with patients in need through our Tele-consultation service. We partner with 3500+ pharmacies in 100+ cities to bring medicine to your doorstep. We've also partnered with Indonesia's largest lab provider to provide lab home services, and to top it off we have recently launched a premium appointment service that partners with 500+ hospitals and allows patients to book a doctor appointment inside our application. We are extremely fortunate to be trusted by our investors, such as the Bill & Melinda Gates Foundation, Singtel, UOB Ventures, Allianz, GoJek, Astra, Temasek and many more. We recently closed our Series C round and in total have raised around USD$180 million for our mission. Our team works tirelessly to make sure that we create the best healthcare solution personalised for all of our patients' needs, and are continuously on a path to simplify healthcare for Indonesia.