Demystifying ETL Cross-job Dependencies with Apache Airflow

At Halodoc, we have always been committed to simplifying healthcare for our end users and our ultimate goal is to excel as a data-driven organization.

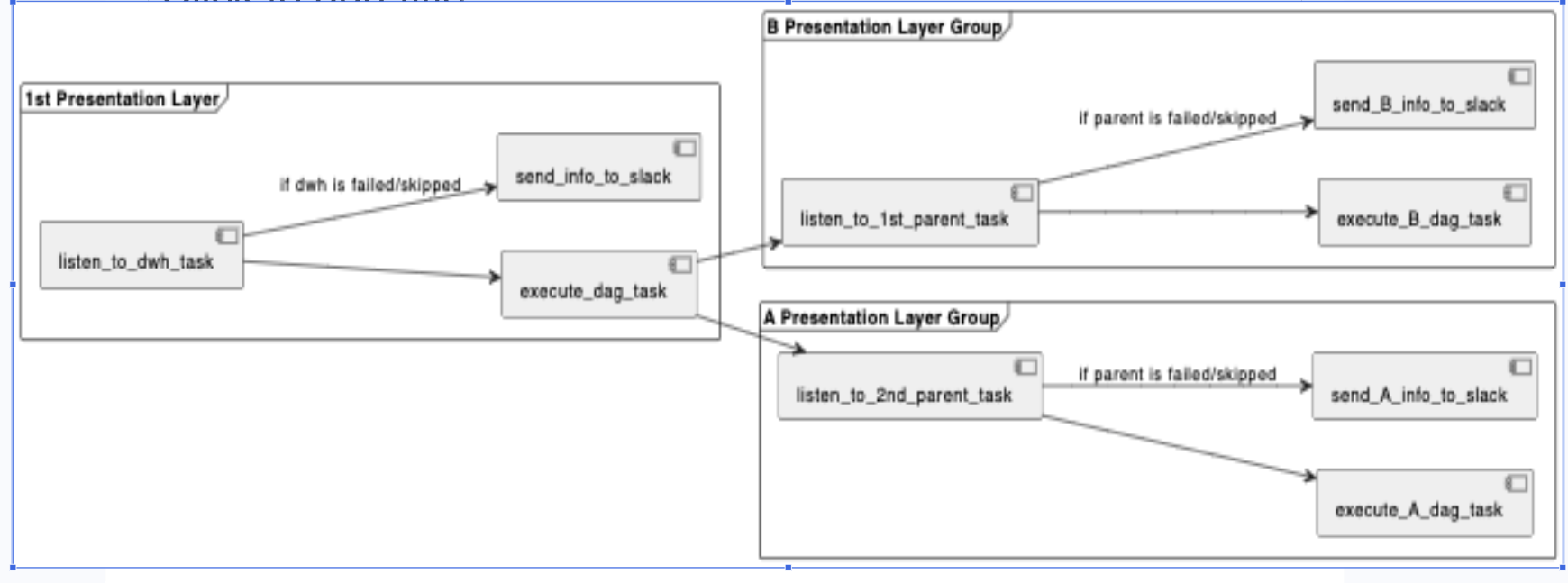

In the previous blog, we explained how to process the data model adopted at Halodoc in the process zone to the report zone(fact and dim) and Presentation layer zone(PL). Presentation layer tables were dependent either on the dimensions and facts table, on other presentation tables, or both. We used to call this an interdependency between tables in Halodoc's data warehouse or reporting layer. We used airflow as the job orchestrator. So, the schedule for all our tables was handled by airflow. Due to such interdependent tables, we observed scheduling issues listed below.

This led to two issues:

- The potential for a race condition to occur (e.g., the parent table running at the same time as the dependent table).

- Added complexity when building a presentation layer table, as we had to consider the execution time of the dependent table to determine when the job should run. This caused delays in data delivery to our customers; at the earliest, data was available around 8 AM in the morning for our stakeholders to use.

Therefore, we had developed a new feature called Cross Job Dependency, where the child job/DAG had to listen to the upstream job/parent job before starting to execute its job. We hoped that this blog would help engineers experiencing the issues described above.

Challenges

- The condition where interdependent tables were running at the same time. The table in the presentation layer that was dependent on another table in the presentation layer wasn't updated, causing discrepancies between tables.

- Incorrect schedule time settings existed as we couldn't predict when a dependent job would finish..

What do we need ?

To accommodate the multi-layered nature of presentation tables (that is, where one presentation layer can act as a source for another presentation layer), we included cross-job configuration within each presentation layer. To interact with the Job, the user needed to be mindful of the following aspects:

Firstly, understanding the layer on which the presentation layer stood—whether it was dependent on other presentation layers or solely on data warehouse tables. Secondly, considering the dependent tables of the presentation layer. It was highly encouraged to include only one parent table whenever possible, owing to the dependency's nature on task sensors.

If the child table was skipped, the same job would also send a Slack alert to inform the stakeholders that the said table wasn't updated.

What do we need to develop?

We used Airflow as the orchestrator for our data warehouse pipeline. Airflow jobs were represented with DAGs, which stand for Directed Acyclic Graphs—a conceptual representation of a series of activities. In the Halodoc data warehouse, all table presentation layers were managed by Airflow DAGs. To create the cross-job dependency feature, we leveraged the Airflow library and integrated it into an existing DAG.

Airflow.sensors.external_task and TriggerDagRun

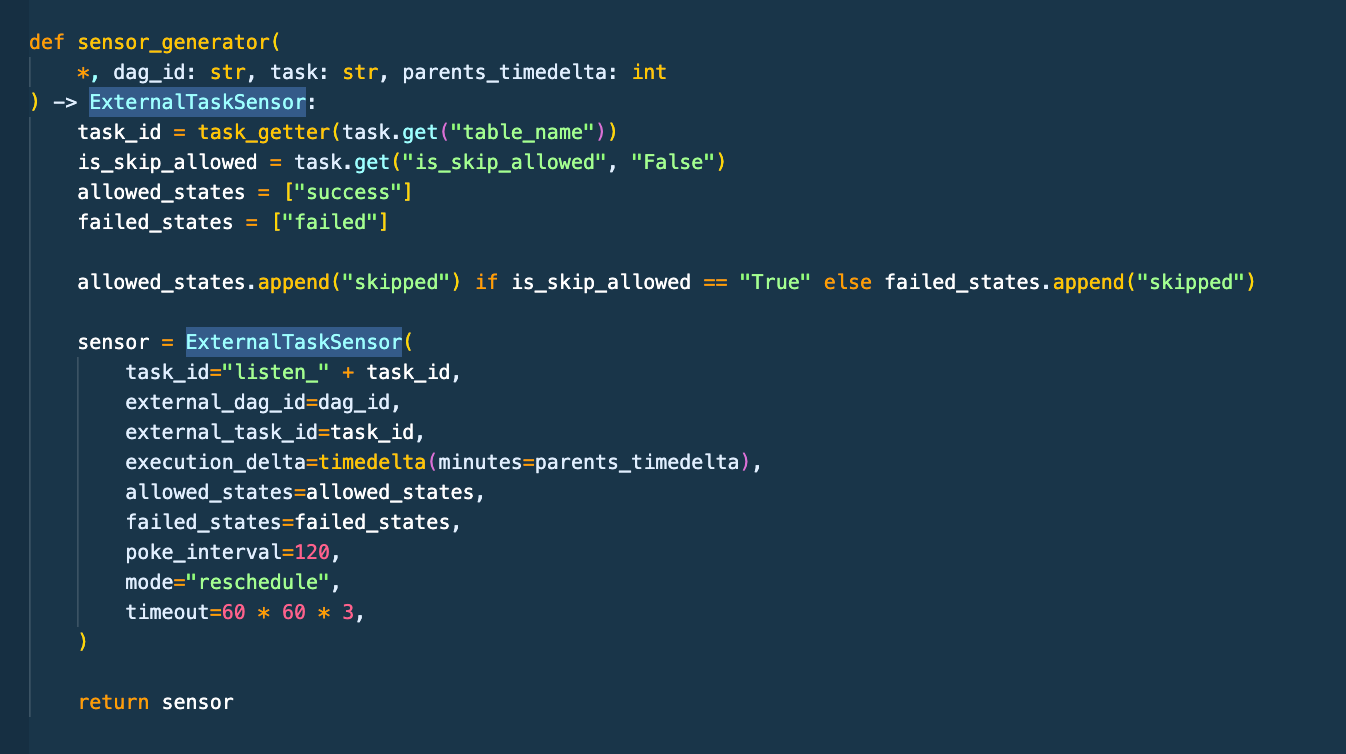

To handle the child table's dependency by monitoring the status of the parent job, we utilized the ExternalTaskSensor in Airflow. We referred to the job that triggers another job as the 'parent job'.

To execute or bypass the child table based on the return value of the preceding task sensor. If the check passes (i.e., the sensor returns true, indicating the successful completion of the parent job), only then is the child table job executed. Achieving this task can be done using TriggerDagRun..

Added variables to the table configuration

We had a table stored in MySQL. This table functioned to store all the variables taken by Airflow to execute each DAG. We stored variables such as schedule and target tables for Airflow to retrieve and run our pipeline. We added several variables to enable the 'cross-job' functionality within Airflow.sensors.external_task. These variables functioned to inform the Airflow DAGs about dependencies on other DAGs and tables.

Cross-job Dependencies

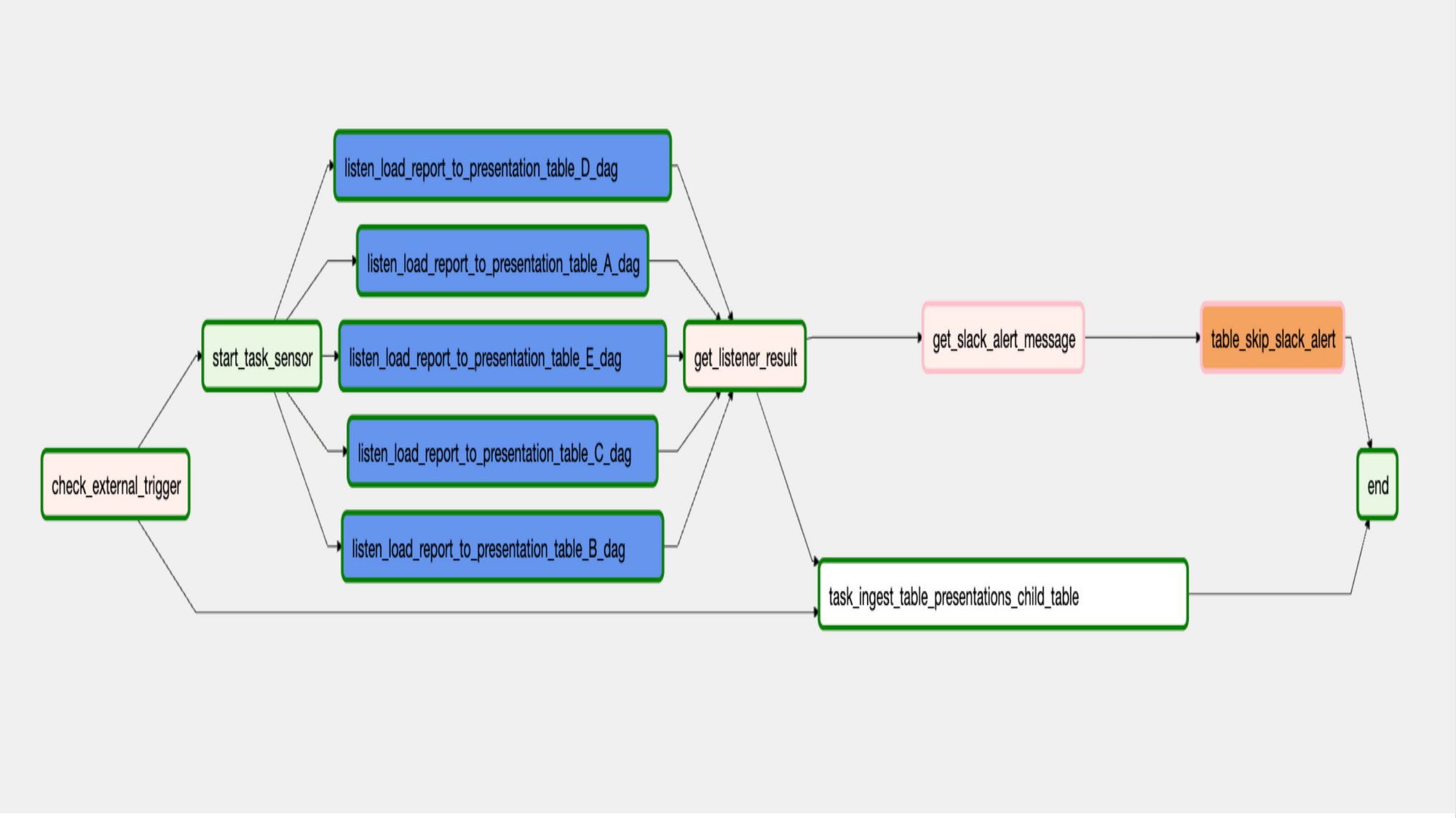

This was the process of how Airflow ran its DAGs with Cross-job dependencies.

- DAG for table_presentations_child_table running.

- Listen task sensor will check external sensor state (listen_load_report_to_presentation_table_A_dag and others).

- If the external sensor state is successful, it will execute task_ingest_table_presentations_child_table and table will update.

- If the external sensor fails we will get a Slack alert.

Conclusion

We managed to reduce the table delivery time from the latest at 8 AM Jakarta time to 4:15 Jakarta time for the deployed tables. This reduction allowed us to decrease the necessity of scheduling presentation layers while maintaining proper SLAs. Through this approach, we were able to ensure that by 5:30 AM Jakarta time, business users could either access the data or receive notifications in case of any issues. This approach also eliminated the condition where interdependent tables were running simultaneously (race condition).

Reference

- https://airflow.apache.org/docs/apache-airflow/stable/_api/airflow/sensors/external_task/index.html#module-airflow.sensors.external_task

- https://airflow.apache.org/docs/apache-airflow/stable/_api/airflow/operators/trigger_dagrun/index.html

- https://blogs.halodoc.io/data-model-adoption-at-halodoc/

- https://blogs.halodoc.io/datawarehouses-presentation-layer-in-halodoc/

Join us

Scalability, reliability and maintainability are the three pillars that govern what we build at Halodoc Tech. We are actively looking for engineers at all levels and if solving hard problems with challenging requirements is your forte, please reach out to us with your resumé at careers.india@halodoc.com.

About Halodoc

Halodoc is the number 1 all around Healthcare application in Indonesia. Our mission is to simplify and bring quality healthcare across Indonesia, from Sabang to Merauke. We connect 20,000+ doctors with patients in need through our Tele-consultation service. We partner with 3500+ pharmacies in 100+ cities to bring medicine to your doorstep. We've also partnered with Indonesia's largest lab provider to provide lab home services, and to top it off we have recently launched a premium appointment service that partners with 500+ hospitals that allow patients to book a doctor appointment inside our application. We are extremely fortunate to be trusted by our investors, such as the Bill & Melinda Gates Foundation, Singtel, UOB Ventures, Allianz, GoJek, Astra, Temasek and many more. We recently closed our Series C round and In total have raised around USD$180 million for our mission. Our team works tirelessly to make sure that we create the best healthcare solution personalised for all of our patient's needs, and are continuously on a path to simplify healthcare for Indonesia.